Abstracts

Abstract

One of the most significant attributes of digital computation is that it has disrupted extant work practices in multiple disciplines, including history. In this contribution, I argue that far from being a trend that should be resisted, this disruption should in fact be encouraged. Computation is presenting historians with novel opportunities to express, analyze, and teach the past, but that potential will only be realized if scholars assume a new research mandate: that of design. For many historians, such a research agenda is likely to seem strange, if not beyond the pale. In their view, there are tasks that fall properly within the domain of the Historian’s Craft, and the design of workflows for digital platforms and of expressive forms for digital narratives is not among them. In Section One, via the writings of Harold Innis, I make the case that a preoccupation with design is in fact very much part of Canada’s historiographic tradition. In Section Two, I present an environmental scan of emerging technologies and suggest that now is an opportune time to revive Innis’ preoccupation with design. In the following two sections, I present the StructureMorph Project, a case study showing how historians can leverage the properties of digital form to meet their expressive, narrative, and attestive needs in the digital, virtual worlds that will become increasingly important platforms for representing, disseminating, and interpreting the past.

Summary

One of the most important attributes of computer data processing is that it has disrupted established working methods in many fields, including history. This study argues that this trend should not be resisted, but rather fostered. Computing offers historians new possibilities to express, analyze, and teach history, but this potential can only be realized if researchers adopt design as a new research mandate. Such a research program may seem strange to many historians, even excessive. There are tasks that belong to the historian’s craft, and the design of workflows for digital tools and of expressive forms for digital narratives is among them. The first part of the article draws on the writings of Harold Innis to show that a concern with design is inseparable from Canada’s historiographic tradition. The second part surveys emerging technologies and argues that the time is ripe to revive Innis’ interest in design. The last two parts present the StructureMorph project, a case study illustrating how historians can take advantage of the properties of the digital model to meet their needs for expression, narration, and demonstration in the digital virtual world, which will become increasingly important for representing, disseminating, and interpreting the past.

Article body

In times past, recent, and alas not so recent, I have been accused of being a missionary. There is some justice to this charge because I am a digital historian. I wish more of my colleagues would become digital historians, and I am not shy in saying so. There is also some merit in this charge because I — like many missionaries — am asking colleagues to shift their attention from the domain of the familiar to the unfamiliar. More specifically, I am asking you, your department colleague in the neighbouring office, and anyone else who will listen to me to consider the question of design. For many of us, the questions associated with this issue — how we express; how we document; how we think; and how we fit into a workflow that supports dissemination of research — seem long settled. My purpose here is to argue that they no longer are. It is also to suggest that, whatever impression you may have of the field of digital history, the issue of design will play an increasingly central role in its evolution over the coming decades.[1]

The basis of this assertion is a brute fact: since the 1940s digital computation has destabilized extant practices in multiple fields, starting in the sciences. That process has spread to other domains of practice, and continues still.[2] My argument is that historians should encourage it. In the past decade, computation has presented users with new instruments for expression, new methods for content dissemination, and new formalisms for thinking about the past. Many of these are freely available.[3] As historians, individually and collectively, we should be mindful in our encounters with the tools being presented to us, as mindful as our humanist predecessors were in their encounter with the printing press.[4] Such mindfulness or, to use a more contemporary term, reflexivity will require us to address questions of design.[5]

In its first sense, “design” will need to connote volition. Historians will need to assess whether a given digital artefact supports or detracts from their purposes, particularly their analytical purposes. In its second sense, “design” will need to refer to expressive practice. Here, scholars will need to answer a series of questions that begin with the words “should,” “would,” “how,” and “what.” Should I appropriate multi-modal forms of expression? Would such an act enhance my expressive capabilities? If so, how can I best operate in a discursive environment populated by multi-modal expressive objects (text, 2D, and 3D content)? What expressive object or combination of objects do I use to represent a historical artefact, event, or scholarly narrative in a virtual world? How do I responsibly document a digital knowledge construct, and refer to previous work? None of these questions has a clear answer at the outset. All will require scholars committed to prototyping, testing, and disseminating solutions.

The core question the historical discipline will need to consider is whether such activities merit its practitioners’ recognition and time. For my part, I believe the answer should be a resounding yes.[6] Computation is presenting historians with unprecedented expressive and analytical opportunities, opportunities that are becoming ever cheaper and ever easier to access. Proper exploitation of these possibilities, however, will require effort.[7] My purpose here is to make the case for that effort, first by pointing to precedent. In section one, I will suggest that a preoccupation with design has roots in Canada’s historiographic tradition via the writings of Harold Innis, the political economist, communications theorist, and 1944 recipient of the J.B. Tyrrell Historical Medal.[8] In section two, I will present a brief environmental scan of emerging technologies as a means to illustrate the capabilities that will soon be open to historians. Finally, in sections three and four, I will present a brief overview of a project currently in progress: the StructureMorph project. It will provide a case study of the kind of research that I suggest historians generally, and digital historians particularly, will need to undertake in the near future.

I

When we think, we solicit the aid of form. More specifically, we, as historians, use formalisms to support our need for expression and analytical purchase. Without formalisms to structure thought and represent thought’s constituents, we would find ourselves lost in an ocean of percepts, looking at “one damn thing after another” as one wag was wont to put it. With formalisms — concepts, the logical syllogisms that give rise to them, formal methodologies, and signifiers — we begin to see the connections — relations — between one phenomenon and another. And with that sight we presume — rightly or wrongly — to see the constituents of causation. Our appeal to form, to theory, puts us in a position to explain, or so we think.

Now, when it comes to analysis, one might expect scholars to take as a matter of course the injunction to exercise sound judgment when selecting a formalism for a given problem. Unfortunately, the history of scholarship over the past century has often told a different story. Consider, for example, Null Hypothesis Significance Testing (NHST), a statistical procedure widely used in the social sciences to decide whether an observed effect is statistically significant. Researchers in psychology and cognate disciplines have been sharply criticized for the rote use of a method that is often, if not always, inappropriate for the task at hand.[9] A similar problem has attended the use of linear mathematical equations in the social sciences. There again, researchers have sometimes seemed more intent on using them as rhetorical devices to establish scientific credibility than as instruments to gain traction over a specific problem in a given field of study.[10]

In the 1940s, Harold Innis saw this latter tendency emerging among his colleagues in economics, and much of his writing over the last decade of his life was dedicated to combatting it. For those who have not been exposed to his writings, Innis is primarily known among Canadian historians for his promotion of the staples hypothesis: the proposition that the structures of the Canadian and American economies were different, and that those differences could be traced to the initiating stages of European economic activity that gave rise to each.[11] Innis is also known for multiple contributions he made in the 1940s to western and global historiography that were as sweeping in their intent, if not their scope, as anything written by Spengler or Toynbee. These works are important because they proved to be early contributions to the field of communications. In each, Innis asserted that communication technology, presently and historically, had had a bearing on how humans live, work and, most importantly, think.[12] Innis’ writings on economics and communications are worth re-visiting because they suggest his preoccupation with the question of design in the two-fold way I have described. Innis pressed his colleagues to ensure their formalisms aligned with their analytical purposes. He further pressed his colleagues to expand their expressive practices, to use modes beyond text and mathematics to represent the economic and cultural systems that concerned them. Let us see how.

Innis had nothing against mathematics per se.[13] What he opposed was the unthinking resort to instruments that contained latent assumptions about human system behaviour at odds with what he had discovered empirically. Here, the chief problem was the supposition that socio-economic and cultural systems are perpetually in equilibrium and behave in a linear, mechanistic fashion.[14] Innis was never able to accept that premise. Human systems, he believed, were better conceived as spatio-temporal objects with punctuated histories. In “The Newspaper in Economic Development,” Innis pressed his colleagues for “a change in the concept of time,” one in which it was not “regarded as a straight line but as a series of curves depending on technological advances.” In an October 1941 letter to his colleague Arthur Cole, Innis expressed his preference for economic studies that stressed “the evolutionary (Spencerian) point of view” because such studies offered “a more solid footing than … the mathematical mechanistic approach.”[15] To capture the evolution of economic and cultural systems, Innis pressed for a mode of representation strikingly similar to the one used in the field of historical GIS (Geographic Information Systems) today, one that combined two formalisms: the time series and the map.[16]

Their combination, Innis argued in a second letter to Cole later that month, had enabled him to gain greater purchase on the history and behaviour “of areas in which a given industry remains dominant over a long period — i.e., fur and fish.” Through a geographic approach to Canadian economic history, and through the interpolation of time series of staples and transport-system price data (a time series is a sequence of measurements of some quantity, be it temperature or a commodity’s price, taken at equal increments of time over a given span), Innis believed he had made an important discovery. Canada’s economy over space and time had operated much like a weather system. Individual sectors of the economy devoted to wheat or other commodities had behaved much like weather fronts, starting in the east and progressively moving west. The factor precipitating change, and thus the punctuations in Canada’s economic history, had been transport costs. “As technological improvements in transportation emerged,” Innis wrote to Cole, “the production of geographic areas changed. For example the wheat belt moved steadily west and its place was taken by dairying or some other form of agriculture.”[17]
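The pairing of the time series and the map that Innis described can be illustrated with a computation of the kind historical GIS practitioners now perform routinely. The Python sketch below is a hypothetical illustration, not a reconstruction of Innis’ own method: it assumes invented wheat-output figures for three unnamed regions, each tagged with an assumed longitude, and computes the output-weighted mean longitude for each decade. Because longitudes west of Greenwich are negative, a steadily falling value would register the westward drift of a “wheat front.”

```python
# Hypothetical illustration: region names, longitudes, and output figures
# are invented for this example; they are not historical data.
REGION_LONGITUDE = {"east": -75.0, "centre": -90.0, "west": -105.0}

# A time series: one observation per decade, i.e. at equal increments of time.
WHEAT_OUTPUT = {
    1860: {"east": 90, "centre": 10, "west": 0},
    1870: {"east": 60, "centre": 35, "west": 5},
    1880: {"east": 30, "centre": 50, "west": 20},
    1890: {"east": 10, "centre": 40, "west": 50},
}


def weighted_mean_longitude(output_by_region: dict[str, float]) -> float:
    """Return the output-weighted mean longitude for one observation."""
    total = sum(output_by_region.values())
    if total == 0:
        raise ValueError("no output recorded for this period")
    return sum(
        REGION_LONGITUDE[region] * amount
        for region, amount in output_by_region.items()
    ) / total


def front_positions(series: dict[int, dict[str, float]]) -> dict[int, float]:
    """Map each period of the time series to the position of the 'front'."""
    return {year: weighted_mean_longitude(obs) for year, obs in sorted(series.items())}


if __name__ == "__main__":
    for year, lon in front_positions(WHEAT_OUTPUT).items():
        print(f"{year}: mean longitude {lon:.2f}")
```

Sorting the series by year keeps the periods in chronological order, so a monotonically falling sequence of longitudes is the numerical signature of the westward movement; with real data, the interesting cases would be the punctuations, where the front jumps rather than drifts.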

Innis’ purpose here and in his other writings extended beyond an immediate need to reach a deeper understanding of human systems. He also pressed for the adoption of alternate and multiple modes of representation because he recognized that every act of representation has a latent Achilles’ heel. Signs represent, but they also distort, and sometimes they conceal. Accordingly, in “On the Economic Significance of Cultural Factors,” Innis pressed his colleagues to appropriate novel forms of representation for the simple reason that doing so constituted good expressive practice. It mattered little what the object of representation was. It could be a social system. It could be a theological doctrine. Effective communication was best supported by selecting expressive objects that permitted easy detection of the relevant message or pattern and imposed minimal cognitive effort on the target audience. Such an aim presupposed the use of a wider array of expressive tools than scholars then employed. It further presupposed consideration of modes such as information visualization.

For this reason, Innis urged his colleagues in economic history to recognize that their “subject is more difficult than mathematics and to insist that tools must be used, and not described, if interpretation is not to be superseded by antiquarianism.”[18] Responsible economists should warn against “the penchant for mathematics and for other scientific tools which have warped the humanities.” To replace those tools, scholars should “provide grappling irons with which to lay hold of areas on the fringe of economics, whether in religion or in art …”[19] The significance of these two domains, Innis noted in other publications, was their repeated appeal to information visualization: the translation of text or numeric data into a visual analogue, such as a graph or a relief in a cathedral. For example, in his unpublished “History of Communication,” Innis writes that in the fifteenth and sixteenth centuries Italian artists repeatedly used visual forms of representation because of “the general inability” of the Italian populace “to conceive abstract ideas.” In the emblem book devised by Andreas Alciati, “Poetry, one of the oldest arts, was combined with engraving, one of the newest. ‘Emblems reduce intellectual conceptions to sensible images and that which is sensible strikes the memory and is more easily imprinted on it than that which is intellectual’.”[20]

It is in these early communication contributions that we see the roots of much of what Innis had to say in his celebrated anthologies, Empire and Communications and The Bias of Communication. During the last decade of his life, Innis enjoined his colleagues and contemporaries to ensure their modes of thought and practice did not settle into an exclusive orientation towards either space or time. In Innis’ parlance, such a state was a monopoly of knowledge, and it was a recipe for disaster, as it hindered observers from seeing much that was essential in the operation of human systems.[21] Such disasters could only be avoided by the mindful use of the tools, and of the cognitive and expressive formalisms, we use to see our environs, past and present. When Innis wrote articles such as “A Plea for Time,” he was, to be sure, arguing that it was imperative for scholars and institutions to remain cognizant of the dimension of time.[22] But it can equally be argued that he was making a plea for the purposeful adoption, creation, and retention of tools that would direct our attention towards both space and time. He was, in short, making a plea for design.

II

Now, Innis’ prescriptions are all very well, but do they amount to anything more than intellectual history today? One could argue that the technological ground was shifting even as Innis made his programmatic calls in the 1940s. The first generation of digital computers was already under construction, and in the decades that followed, computing applications emerged that addressed the needs specified by Innis, GIS being one example. In this line of reasoning, the market appears to have anticipated all or most of historians’ traditional needs, and even the outliers indicated by Innis. There is no reason, then, for historians to insert themselves into the digital design process.

Such an argument is possible, but over the long term I do not believe it will hold. Why? To start, for many of us the plausibility of this argument stems from our exposure to widely used applications such as word processing and e-mail, which have supported all or most of our needs for research and scholarly communication. Fair enough, but it should be noted that these applications, and related classes of software such as databases, were designed to treat two things: text and number. Further, they were conceived in a print-based culture where user requirements for manipulating text and number were long known and well understood. The same cannot be said for software that treats expressive objects in two, three, and four dimensions. There, one is more likely to find a gap between what vendors provide and what users want. Take the example of GIS cited above. GIS is software used to discover spatial patterns in data, such as immigrant patterns of settlement (diffuse or clustered) in a given city. While it has powerfully supported user communities in industry, science, and the social sciences, it has only partly met the needs of historians and historical geographers. These two communities routinely complain that the software’s primary orientation is synchronic, not diachronic: GIS is generally poor at tracking the evolution of social and environmental systems over time.[23] A second complaint is that GIS, given its origins as an application to support scientific research, is primarily designed to support quantitative representations of space. It does little to help scholars interrogate historic, qualitative constructions of space.[24]
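The distinction between diffuse and clustered settlement can be made concrete with one classic spatial statistic, the Clark-Evans nearest-neighbour ratio. The sketch below computes it in plain Python rather than through any GIS product’s interface; the household coordinates are hypothetical, and the sketch ignores edge effects, which a real analysis would need to correct for.

```python
import math

Point = tuple[float, float]


def mean_nearest_neighbour_distance(points: list[Point]) -> float:
    """Average distance from each point to its closest other point."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        total += min(
            math.hypot(x1 - x2, y1 - y2)
            for j, (x2, y2) in enumerate(points)
            if j != i
        )
    return total / len(points)


def clark_evans_ratio(points: list[Point], area: float) -> float:
    """Observed mean nearest-neighbour distance divided by the distance
    expected for a random pattern of the same density, 0.5 / sqrt(n / area).
    R < 1 suggests clustering, R near 1 randomness, R > 1 dispersion.
    No edge correction is applied in this simplified sketch."""
    if area <= 0:
        raise ValueError("area must be positive")
    expected = 0.5 / math.sqrt(len(points) / area)
    return mean_nearest_neighbour_distance(points) / expected


if __name__ == "__main__":
    # Two hypothetical sets of four households (coordinates in km)
    # in a 10 km x 10 km district.
    clustered = [(2.0, 2.0), (2.1, 2.0), (2.0, 2.1), (2.1, 2.1)]
    dispersed = [(2.5, 2.5), (2.5, 7.5), (7.5, 2.5), (7.5, 7.5)]
    print(clark_evans_ratio(clustered, area=100.0))  # well below 1
    print(clark_evans_ratio(dispersed, area=100.0))  # about 2
```

Note that the statistic is purely synchronic: it describes one snapshot of settlement. Tracking how the ratio changes from census to census would require running it over a time series of snapshots, which is precisely the kind of diachronic work historians say the software supports poorly.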

The issues posed by GIS are emblematic, I think, of a problem that historians committed to computational methods will face for some time: minding the gap. One could argue that the private sector will quickly close the gaps scholars discover, but the example of GIS suggests otherwise. Scholars have pressed vendors such as ESRI to enhance their products’ capacity to treat and represent time, to minimal effect.[25] Our needs, unfortunately, often lie on the periphery of what ESRI’s customer base wants, and the company, understandably, is more interested in attending to those customers than to us. That market reality suggests that in the near to medium term, digital historians should not assume their needs will align with the common set of requirements shared by most users in most domains. Instead, they will need to adopt an ethic of tool modification.

Tool modification in this context will mean three things. In its first sense, it will mean the creation of new software from scratch. I will not say much about this option save to note its availability and its difficulty. In this scenario, a historian acquires the skills of a computer programmer and creates, for example, an open-source version of GIS, one that is placed online for others to correct and improve.

Tool modification can also refer to the modification of extant tools. Here, happily, private sector companies have often proven more obliging, by permitting users to bridge the gap between software capability and user need via modifications known as plug-ins. A plug-in, defined simply, is a module of code that one can import into an existing tool to give it new capabilities. I typically, for example, create 3D models using SketchUp. I might in a certain circumstance want to modify a 3D model created in another package, such as Cinema 4D. To meet that contingency, I would search for a SketchUp plug-in that supports importation of Cinema 4D objects, and then install it prior to beginning work.
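The plug-in arrangement can be sketched in a few lines of Python. The sketch assumes a hypothetical host tool and a hypothetical “.fmt” file format; it does not reproduce SketchUp’s actual (Ruby-based) extension interface or any Cinema 4D importer, only the general pattern: the host exposes a registration point, and a plug-in adds a capability without any change to the host’s own code.

```python
from typing import Callable

# A hypothetical importer: takes raw file bytes, returns a list of mesh names.
Importer = Callable[[bytes], list[str]]


class HostTool:
    """A hypothetical modelling tool whose file formats are extensible."""

    def __init__(self) -> None:
        self._importers: dict[str, Importer] = {}

    def register_importer(self, extension: str, importer: Importer) -> None:
        """Registration point that plug-ins call to add a file format."""
        self._importers[extension.lower()] = importer

    def open_file(self, filename: str, data: bytes) -> list[str]:
        """Dispatch to whichever importer is registered for the extension."""
        extension = filename.rsplit(".", 1)[-1].lower()
        if extension not in self._importers:
            raise ValueError(f"no plug-in installed for .{extension} files")
        return self._importers[extension](data)


def install_foreign_format_plugin(tool: HostTool) -> None:
    """A hypothetical plug-in for '.fmt' files, here a toy text format
    with one mesh name per line."""

    def import_fmt(data: bytes) -> list[str]:
        lines = data.decode("utf-8").splitlines()
        return [line.strip() for line in lines if line.strip()]

    tool.register_importer("fmt", import_fmt)


if __name__ == "__main__":
    tool = HostTool()
    install_foreign_format_plugin(tool)  # "install it prior to beginning work"
    print(tool.open_file("chapel.fmt", b"nave\napse\n"))
```

Before the plug-in is installed, opening a “.fmt” file fails with an error; afterwards the same call succeeds. That before-and-after difference is the gap between software capability and user need that the plug-in bridges.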

Tool modification can finally refer to convergence: the act of aggregating two or more tools into new combinations and, in their sum, creating a new tool.[26] One can profitably think of tool convergence as a twenty-first-century version of the oral tradition as described by Harold Innis. In his writings, Innis characterized Greek poetry and Hebrew biblical texts as the product of pre-existing lays that were combined and re-combined into new constructs; those actions enabled the Greeks in particular to create new genres of poetry and to interpret events in their environs, particularly in nature, in new ways.[27] The smartphone is a more contemporary example of tool convergence in action. It is the sum of multiple tools that have been integrated to enhance user capacities, including the video monitor, the QWERTY keyboard, the camera and, lest we forget, the phone. Tool convergence, in short, will enable historians to address shortcomings in their expressive and analytical capacities, and to explore and see the past in novel ways.

Scholars will need these strategies for minding the gap, given that a brief environmental scan suggests that in the short to medium term the gaps humanities scholars are likely to face will grow in number, size, and complexity. The basis for this suggestion comes from a Compute Canada meeting I attended in 2012 where researchers representing the sciences, social sciences, and humanities were asked a simple question: what are your future requirements for content visualization? To my surprise, the answers that emerged from colleagues across the spectrum of the disciplines were remarkably similar. Researchers sought computational power and applications that would permit the simultaneous visualization of content at multiple scales of spatial organization, ranging from the molecular and microbial to the planetary and galactic. They further sought applications that would permit the simulation of multiple temporal increments, ranging from the nanosecond to the millennium and beyond.[28]

Now, there is no reason in principle why scholars could not build a platform that would meet, or move substantially toward, the vision of computationally intensive, spatio-temporal visualization I have just outlined. To function in such a context of computation, work, and expression, scholars’ first adaptive strategy will need to be tool convergence. They will need to bring together some or all of the following applications:

- New Paradigms for Computing

  - High Performance Computing (HPC): High Performance Computing is a paradigm of computing that affords computational power orders of magnitude larger than anything we can obtain on our desktops. Computing clusters, such as those hosted by SHARCNET at McMaster and Western Universities, provide this scale of computing by networking thousands of processors. By the end of the decade, the first exascale HPC systems will come online, capable of performing one quintillion (10^18) calculations per second. In concrete terms, HPC can support simulations of complex systems with billions of constituents, ranging from galaxies to the human cell. HPC offers the most immediate prospect for providing the computing power necessary to support the visualizations described above.[29]

- Applications for Content Expression, Pattern Detection, and Analysis

  - Game Engines: Game Engines, at least at the start, can be usefully characterized as “word processors” for Virtual Worlds. Applications such as the Unity game engine support the creation and display of multi-modal content (text, 2D, 3D/4D, audio, and so on). They are important because they enable users not only to appropriate and integrate multiple types of content, but also to specify the resolution of that content. In the context of spatio-temporal virtual worlds, content resolution refers to the degree of abstraction versus detail used in a given representation of a space. In some circumstances, detail is a hindrance, and scholars opt for iconic and cartographic representations of a given locale. In others, they will want a representation rich in topographic, structural, and photo-realistic detail. Game Engines support the creation of knowledge artefacts that shift sequentially from one degree of content resolution to another. They also support the concurrent representation of the same space using two modes of representation with two different degrees of detail, such as a map and a 3D model of a given city.

  - Geographic Information Systems (GIS): GIS software presents a suite of tools designed to support pattern detection in data. It is at once a spatial database and a visualization tool, one that combines series and time series of attribute data, such as ethnicity, with spatial data, such as an address. Through its tools a researcher can trace, for example, the spatio-demographic profile of a city, determining if and where a given ethnicity has clustered.

  - Agent-Based Simulations: Agent-Based Simulations (ABS) are emerging as an important complementary application to GIS in the social sciences. While GIS is useful for discovering patterns in data, ABS are useful for assessing hypotheses that explain those patterns, in large measure because they are designed to simulate the behaviour of the social systems that gave rise to them. ABS have been used to model populations of as many as one million people. In history and computer science, they have been used to explore questions ranging from the circumstances behind the disappearance of the Anasazi to the reasons for Nelson’s victory at Trafalgar. Unlike simulations that rely on linear mathematical formalisms, ABS explicitly model the heterogeneity of the populations they represent.[30]

- New Paradigms for Content Dissemination

  - Google Earth, or an Open Source Variant of Google Earth: Google Earth operates much like a globe, but one with enhanced capacities. It is designed to display content, be it natural topography or human activity, at multiple scales of spatial organization. It is also capable of displaying multi-modal content, be it cartographic, photographic, or topographic.[31]

  - The Geo-Spatial Web: When one thinks of the World Wide Web, the metaphor that often comes to mind is a two-dimensional web with multiple junctions or nodes. At each node resides a website where one or more pages are available for users to view. In the 1.0 version of the web, pages at one node or site were connected to content at a second site via hypertext links. The 2.0 version brought extensibility: the capacity of one website to appropriate content from another. XML (Extensible Markup Language) and XML technologies (such as XPath and XSLT) afford a given webpage the capacity to take an extract of text from another, for all intents and purposes a block quote, selected according to criteria specified by the programmer. They further permit the given website to re-format the appropriated text, again along lines specified by the programmer. In all versions of the web, the governing frame of reference for content searching, content acquisition, and web navigation is in large measure determined by semantics: the keywords that constitute the meta-data, the subject headings, of web pages.[32]

    The Geo-Spatial Web will be a new iteration of the web. It will not efface the web we know. It will instead be a complement, one that offers new capacities for content requisition and dissemination. To start, it too will be populated with the genre of digital globe we currently associate with Google Earth. Multi-modal content will be displayed at each site, and it will be extensible to neighbouring digital globes. The “browsers” we use to survey these sites will be game engines, or extant browsers that have evolved to mimic the behaviours and capabilities of game engines. In this iteration of the web, searching will be supported not only by semantics but also by geographic coordinates. In this area, computer scientists are seeking to enable researchers to harvest all content associated with a given locale and, more specifically still, with a given set of geographic coordinates. Latitude, longitude, and UTM coordinates will become normal constituents of meta-data for content placed on the Geo-Spatial Web.[33]
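To make the contrast between agent-based simulation and linear formalisms concrete, here is a minimal agent-based sketch in Python. It is not the Anasazi or Trafalgar model cited above; it is a variant of Thomas Schelling’s well-known segregation model, chosen only because it is short. Its parameters (a wrapping 20 x 20 grid, two groups, relocation to a random empty cell, individually drawn tolerance thresholds between 0.2 and 0.6) are illustrative assumptions, not empirical ones. The point is the heterogeneity: each agent carries its own threshold, something an equation over population averages would not represent directly.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    group: int        # membership in one of two hypothetical groups (0 or 1)
    threshold: float  # minimum share of like neighbours this agent accepts


Grid = list[list[Optional[Agent]]]


def like_share(grid: Grid, r: int, c: int) -> float:
    """Share of an agent's occupied neighbouring cells (8 cells, wrapping
    at the edges) that hold agents of the same group."""
    size = len(grid)
    agent = grid[r][c]
    same = total = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue
            other = grid[(r + dr) % size][(c + dc) % size]
            if other is not None:
                total += 1
                if other.group == agent.group:
                    same += 1
    return 1.0 if total == 0 else same / total


def populate(size: int, empty_share: float, rng: random.Random) -> Grid:
    grid: Grid = [[None] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            if rng.random() >= empty_share:
                # Heterogeneity: each agent draws its own tolerance threshold.
                grid[r][c] = Agent(rng.randint(0, 1), rng.uniform(0.2, 0.6))
    return grid


def step(grid: Grid, rng: random.Random) -> int:
    """Move every currently unhappy agent to a random empty cell.
    Returns the number of agents moved."""
    size = len(grid)
    unhappy = [(r, c) for r in range(size) for c in range(size)
               if grid[r][c] is not None
               and like_share(grid, r, c) < grid[r][c].threshold]
    moved = 0
    for r, c in unhappy:
        empties = [(er, ec) for er in range(size) for ec in range(size)
                   if grid[er][ec] is None]
        if not empties:
            break
        er, ec = rng.choice(empties)
        grid[er][ec], grid[r][c] = grid[r][c], None
        moved += 1
    return moved


def mean_similarity(grid: Grid) -> float:
    """Average like-neighbour share across all agents: a simple segregation index."""
    shares = [like_share(grid, r, c) for r, row in enumerate(grid)
              for c, cell in enumerate(row) if cell is not None]
    return sum(shares) / len(shares)


def simulate(size: int = 20, empty_share: float = 0.1,
             steps: int = 30, seed: int = 1) -> Grid:
    rng = random.Random(seed)
    grid = populate(size, empty_share, rng)
    for _ in range(steps):
        if step(grid, rng) == 0:
            break  # every agent is content with its neighbourhood
    return grid


if __name__ == "__main__":
    print("before:", round(mean_similarity(simulate(steps=0)), 2))
    print("after: ", round(mean_similarity(simulate(steps=30)), 2))
```

Comparing the index before and after the run shows how individually modest preferences can aggregate into a city-scale pattern; in Schelling-type models the index typically rises well above its starting value. Run in the other direction, this is how ABS can be used to assess a hypothesis about why a clustered pattern found in GIS might have arisen.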
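The two mechanisms described for the web, extracting content from another site’s structured data and searching by geographic coordinates rather than keywords, can be combined in a short Python sketch. It uses the XPath subset supported by the standard library’s xml.etree.ElementTree (not full XPath or XSLT), a hypothetical XML feed whose element names and entries are invented for the example, and the haversine formula for great-circle distance. It illustrates the principle only; it does not describe any existing Geo-Spatial Web standard.

```python
import math
import xml.etree.ElementTree as ET

# A hypothetical feed published by another site. Element names (item,
# title, lat, lon) and the entries themselves are invented for this example.
FEED = """
<feed>
  <item><title>Harbour warehouse photograph</title><lat>45.5017</lat><lon>-73.5540</lon></item>
  <item><title>Canal lock survey</title><lat>45.4781</lat><lon>-73.5710</lon></item>
  <item><title>Quebec City customs ledger</title><lat>46.8139</lat><lon>-71.2080</lon></item>
</feed>
"""

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two latitude/longitude points, in km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    d_lat = p2 - p1
    d_lon = math.radians(lon2 - lon1)
    a = math.sin(d_lat / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(d_lon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def items_near(feed_xml: str, lat: float, lon: float, radius_km: float) -> list[str]:
    """Extract items from the feed with an XPath-style query, then keep the
    titles of those whose coordinates fall within radius_km of (lat, lon)."""
    root = ET.fromstring(feed_xml.strip())
    results = []
    for item in root.findall("./item"):
        lat_text, lon_text = item.findtext("lat"), item.findtext("lon")
        if lat_text is None or lon_text is None:
            continue  # content without coordinates cannot be located
        if haversine_km(lat, lon, float(lat_text), float(lon_text)) <= radius_km:
            results.append(item.findtext("title"))
    return results


if __name__ == "__main__":
    # Everything within 10 km of a point in central Montréal.
    print(items_near(FEED, 45.5017, -73.5673, radius_km=10.0))
```

The query is driven entirely by coordinates: neither search term nor subject heading appears in it, which is the shift from semantic to geographic meta-data that the passage anticipates.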

Now, the above scenario likely suggests I watch too much science fiction. That charge is true, but it does not negate the scenario’s purchase, for it is based on extant or soon-to-be-realized technology; the imperative to cope with Big Data; and contemporary statements of scholars who are realizing the need to converge two or more of the tools listed above. With respect to Big Data, the central issue is that researchers in multiple domains are now enjoying an embarrassment of riches. Computation and the Internet together have produced more accessible online data than researchers in fields ranging from astronomy to history will be able to treat unaided in a lifetime. The only viable way to meet this challenge is through more computation, and through computing applications devoted to visualization and pattern detection.[34] With respect to contemporary scholars, one can point to recent statements calling for the integration of game engines and, more generally, virtual worlds with GIS,[35] agent-based systems with GIS,[36] and Google Earth with GIS.[37] Private sector firms such as ESRI are recognizing the trend as well. In recent versions of ArcGIS, for example, one can find a digital globe akin to Google Earth. Further, via its CityEngine plug-in, users of the firm’s cartographic software can rapidly generate photo-realistic virtual worlds. Some variation of the scenario above will be realized once researchers obtain the expertise, funding, and computational power to build it. Indeed, some are trying now.

III

This scenario constitutes a plausible way to adapt to the challenges and opportunities afforded by contemporary computing. I will not presume, however, to label it a necessary response. Historians collectively do not have to embrace computation. What I will presume to suggest is that such a multi-modal, multi-application, computationally powerful platform would enable scholars to express content and treat it in ways we currently cannot. For that reason, I will also presume to suggest that historians, collectively, give that prospect hard and deep consideration. I make this suggestion also because our colleagues in cognate disciplines such as anthropology and archaeology have been far more active in appropriating computing than we have, and it strikes me as merely prudent to consider why that might be so. Harold Innis, hard-boiled economist that he was, was not above bringing his discipline into conversation with theology. Perhaps we might also consider the doings of the neighbours next door.

If we do so, and over the coming years a plurality or majority of historians begin to integrate computing more deeply into their practice, I believe the strategy of tool convergence will constitute an important way to engage with computation. It will not, however, be the only strategy. For the purposes of this article, the scenario above is useful because it provides a structured context in which to consider the design problems posed at the start of this study. In a multi-modal platform with the tools specified above, how will we construct a narrative? How will we express and document content? What kind of workflow will we use to support publishing, peer review, and so on? Doubtless, some of these questions will be resolved by a process of appropriation. History will not be the only domain of practice to face these issues, and historians may find solutions among unlikely allies, such as the construction industry. Sometimes there is no need to reinvent the wheel. We will find ourselves in a situation akin to that of François Rabelais, who routinely canvassed the Paris market in search of novel forms of expression that eventually made their way into Gargantua and Pantagruel, or so Mikhail Bakhtin informs us.[38]

In other circumstances, design questions will need to be solved via a process of creation. While we are engaging in tool convergence, we will concurrently need to engage in the creative act of tool modification. We will need to modify the applications and expressive forms we are incorporating into our multi-modal platforms and workflows. My purpose in this section is to consider how the strategy of tool modification might be accomplished, using the StructureMorph project as a concrete frame of reference.

The StructureMorph project is one initiative associated with Montréal, plaque tournante des échanges, a SSHRC Partnership Grant initiative exploring Montréal’s historic role as a hub for the inflow and outflow of people, goods, and knowledge. Its purpose is to support the initiative’s aim to create a 4D model of the city.[39] The project was originally conceived as a thought experiment I presented to colleagues at an August 2009 Institutes for Advanced Topics in the Digital Humanities workshop hosted by the National Center for Supercomputing Applications in Urbana, Illinois. The aim of the workshop was to determine historians’ requirements for an HPC-supported virtual world, much like the one specified above.[40] In my presentation there, I made the case to my colleagues that we would need to narrow the terms of the thought experiment considerably to proceed. We could not hope, for example, to specify requirements for the discipline as a whole, and for that reason I suggested we narrow our focus to two domains: social history and architectural history. Focusing on these fields made sense, I suggested, as both have been among the most active in the discipline in appropriating computing and in specifying their requirements for it. The terms of the thought experiment were further narrowed by focusing on one spatial scale: the urban level of spatial organization. Finally, I narrowed the scope of the workshop by focusing on the implications of converging just two applications: the game engine and GIS.

Once the terms of our exploration were settled, at least initially, I next suggested that a great deal of thought would need to be given to the properties of buildings in virtual worlds, a discussion I will continue here. The basis for the conclusion offered in Urbana was the recognition that social and architectural historians would be asking a great deal of buildings as expressive objects in the HPC platform we conceived. For social historians, the digital building will likely fill the role performed by 2D polygons in GIS today. There, polygons perform two crucial functions. First, they tie census and other forms of demographic data to a spatial location. You, the historian, create that tie by first creating a 2D polygon, usually by tracing the outline of a building or property on a map using GIS tools that support polygon creation. You then connect that polygon to a line of data, again using GIS tools designed for that purpose. Once you have created multiple polygons, and multiple ties to lines of data, you are in a position to perform the second polygon function in GIS: information visualization. You can use the map and attendant polygons to reveal patterns embedded in your data, using the dimension of space to structure it.

That property can be used, for example, to explore the demographic profile of a neighbourhood. The historian can take an array of colours and assign one to each ethnicity represented in the neighbourhood or set of neighbourhoods under examination, using red for English, white for Chinese, blue for Jewish, and so on. The same historian can then generate a map in which each polygon is coloured to reflect the ethnicity of its residents. The resulting map, taken as a whole, enables the researcher to identify patterns of settlement for a given ethnicity, determining, for example, whether the specified group is distributed evenly throughout the space under examination or whether its members, for whatever reason, are clustered at the point in time under scrutiny.[41] For social historians in the conceived platform, then, 3D/4D buildings will need to perform a function similar to that of 2D polygons today: they will need to express a relationship between a given building and the activities and attributes that were associated with it.
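The two polygon functions described above (tying a polygon to a line of data, then colouring it from that data) can be sketched in a few lines. This is a minimal illustration, not any particular GIS package's API: the `Polygon` class, the `join_attributes` and `colour_by_ethnicity` helpers, the lot identifiers, and the census rows are all hypothetical, and only the colour assignments follow the example in the text.

```python
from __future__ import annotations
from dataclasses import dataclass

# Hypothetical colour scheme, following the example in the text.
ETHNICITY_COLOURS = {"English": "red", "Chinese": "white", "Jewish": "blue"}

@dataclass
class Polygon:
    polygon_id: str
    outline: list[tuple[float, float]]  # traced vertices of a building or lot

def join_attributes(polygons, records, key="polygon_id"):
    """First GIS function: tie each polygon to the line of data sharing its identifier."""
    rows_by_id = {row[key]: row for row in records}
    return {p.polygon_id: (p, rows_by_id.get(p.polygon_id)) for p in polygons}

def colour_by_ethnicity(joined, scheme=ETHNICITY_COLOURS, unknown="grey"):
    """Second GIS function: derive a fill colour for every polygon from its data row."""
    fills = {}
    for polygon_id, (_polygon, row) in joined.items():
        ethnicity = row.get("ethnicity") if row else None
        fills[polygon_id] = scheme.get(ethnicity, unknown)
    return fills

# Hypothetical lots and census rows, for illustration only.
lots = [
    Polygon("lot-12", [(0, 0), (10, 0), (10, 8), (0, 8)]),
    Polygon("lot-13", [(10, 0), (20, 0), (20, 8), (10, 8)]),
    Polygon("lot-14", [(20, 0), (30, 0), (30, 8), (20, 8)]),
]
census = [
    {"polygon_id": "lot-12", "ethnicity": "English"},
    {"polygon_id": "lot-13", "ethnicity": "Chinese"},
]
print(colour_by_ethnicity(join_attributes(lots, census)))
# {'lot-12': 'red', 'lot-13': 'white', 'lot-14': 'grey'}
```

Polygons with no matching line of data are coloured grey rather than dropped, so gaps in the record remain visible on the map.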

For architectural historians, however, these same objects will need to express a different set of relationships, namely those existing between the model building and the data that gave rise to it. More specifically — if the literatures stemming from architectural history, theatre history, archaeology, and virtual heritage are any indication — scholars will require an expressive object that reveals itself to be a mediated object, one that refers to a referent that existed in space and time.[42] It will, accordingly, need to meet the following user requirements:

-

It will need to visually inscribe degrees of confidence on building constituents — Scholars are keenly aware that 3D models of historic structures are imperfect, mediated things that are the product of data and inference. They are further aware that photo-realistic representations of structures can communicate a false message: that the presented model is not a hypothesis. For the naïve and not-so-naïve viewer alike, the scholar is often seen to “know” that the historic structure looked the way it is presented in the model. This, of course, is nonsense. There has been a growing recognition in multiple digital humanities literatures that symbolic modes of representation need to be employed to differentiate model constituents, to show which sections of a structure are based on real-world data, which on appeals to architectural context, and which on inferences from basic engineering principles or the laws of physics.[43]

-

It will need to display the life cycle of heritage structures in an economical and intuitive way — One of the key challenges of representing content in virtual worlds has been the inclusion of the fourth dimension: time. In the 1990s and for much of the last decade, 3D model formats such as VRML could not accommodate time. If you wanted to show the evolution of a structure over its life cycle, you had to follow one of two expressive strategies: succession or juxtaposition. Succession is in principle a great strategy. The problem is that each time you introduce a new iteration of the given model you also have to re-render the entire virtual world surrounding it, a time-consuming task. The second strategy, juxtaposition, is also perfectly viable. But here you are required to remove a model from its urban context in order to display each iteration of the given structure side by side. What scholars need is a mode of expression that will support the transformation of a given model without re-rendering everything around it.

-

It will need to possess expressive modes that support proper documentation — Finding ways to properly document scholarly digital models has been another fundamental challenge faced by digital scholars over the past 20 years. The problem, in essence, is that we have no common standards for documenting a presented model: we do not know how to footnote a building. Still, despite the lack of a common framework, two common themes have emerged in the digital history, virtual heritage, and digital archaeology literatures. The first is the familiar principle of documentation according to provenance, as we find with print-based knowledge objects. We need to know what data you used to generate your model, and where you got it from. The second requirement is documentation of workflow. Here, the imperative will be to show the thinking and decision-making that gave rise to the model structure in its final form. One important constituent of that accounting will be the display of prior versions of the submitted artefact. The individual or team will need to show the models they created prior to the creation of the model iteration submitted for display. It will be akin to submitting prior drafts of a written manuscript as well as its final version. A second important constituent of that accounting will be to display versions produced by other scholars elsewhere. Emerging documentation requirements, in short, suggest that a digital model will not be a representation of a single version produced at a given time. A digital model will in fact rest on multiple versions, each available for interrogation and display.
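One way to picture the confidence and documentation requirements together is as a single version record. The sketch below is hypothetical throughout (the `Basis` categories mirror the three named in the first requirement; the colours, field names, and sample sources are assumptions): each constituent carries its evidentiary basis, the record lists its sources for provenance, and `previous` links back to earlier drafts so the workflow can be displayed.

```python
from __future__ import annotations
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

class Basis(Enum):
    """Evidentiary basis of a constituent, after the three categories named in the text."""
    DATA = "real-world data"
    CONTEXT = "architectural context"
    INFERENCE = "engineering inference"

# Hypothetical symbolic colours used to inscribe confidence on the model.
BASIS_COLOURS = {Basis.DATA: "green", Basis.CONTEXT: "yellow", Basis.INFERENCE: "orange"}

@dataclass
class ModelVersion:
    label: str                        # e.g. "final", "penultimate", "first"
    authors: str
    sources: list[str]                # provenance: which data, and where it came from
    basis: dict[str, Basis]           # constituent name -> evidentiary basis
    previous: Optional[ModelVersion] = None                      # workflow: the prior draft
    external: list[ModelVersion] = field(default_factory=list)   # versions by other scholars

    def confidence_colours(self) -> dict[str, str]:
        """Symbolic colour for each constituent, in place of photo-realism."""
        return {part: BASIS_COLOURS[b] for part, b in self.basis.items()}

    def drafts(self) -> list[str]:
        """Labels of this version and every earlier draft, newest first."""
        labels, version = [], self
        while version is not None:
            labels.append(version.label)
            version = version.previous
        return labels

first = ModelVersion("first", "Team A", ["survey A"], {"walls": Basis.CONTEXT})
final = ModelVersion("final", "Team A", ["survey A", "photograph B"],
                     {"walls": Basis.DATA, "roof": Basis.INFERENCE}, previous=first)
print(final.drafts())              # ['final', 'first']
print(final.confidence_colours())  # {'walls': 'green', 'roof': 'orange'}
```

Linking drafts as a chain keeps each earlier version available for interrogation, which is the "multiple versions" property the documentation requirement calls for.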

To meet these requirements, the StructureMorph Project is focusing its time and effort on developing a category of expressive object that is gaining greater currency in the digital humanities: the Complex Object. There is no fixed definition of the qualities associated with Complex Objects, but among other things the Complex Object is conceived as a hierarchical construct of many parts, a useful feature for scholars interested in representing the multi-constituent object known as the building. It is also a heterogeneous object. It is not based on one mode of representation such as text. It is rather an amalgam of different expressive forms ranging from text to audio, 2D animations, and 4D representations.[44]

For the project’s purposes, however, the Complex Object is perhaps most valuable as an object to think with, as some scholars have put it. It certainly forced project participants to consider systematically how digital buildings set in digital worlds could be deployed to meet the user requirements specified above. But it also presented us with the opportunity to challenge our latent conceptions of what constitutes a signifier, and how it operates. Typically, we tend to think of signifiers — be they letters, musical tones, or other things — as discrete objects that exist in a single state. We generally think about the relations between one signifier and another in terms that derive from classical conceptions of space and time. Accordingly, a sign is seen as one “thing” or another, and the relationship between one sign and another is determined by rules governing juxtaposition (space) or succession (time).

As we considered our requirements, however, we recognized we wanted an expressive object with behaviours analogous to objects existing at the sub-atomic level. There, an object is not conceived as a single thing. It is conceived as a suite of potential things that co-exist simultaneously. An electron, for example, is seen as being at once a wave and a particle. These two potential “things” that are the electron maintain a relation that is neither succession nor juxtaposition. Their relation is known rather as superposition. The electron’s resolution — or collapse — to wave or particle is not determined by a spatial or temporal grammar, but rather by viewer interaction and, more specifically, by the viewer’s need to see it as a wave or particle.

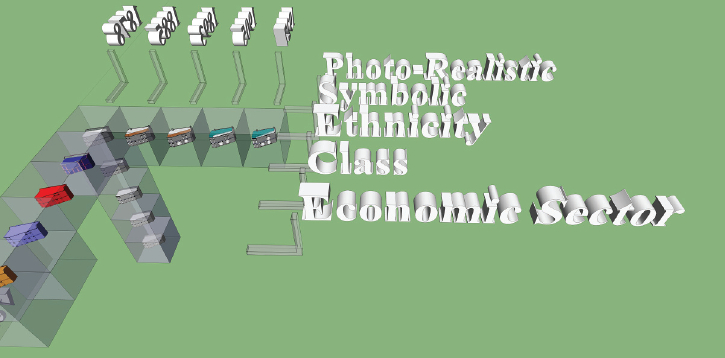

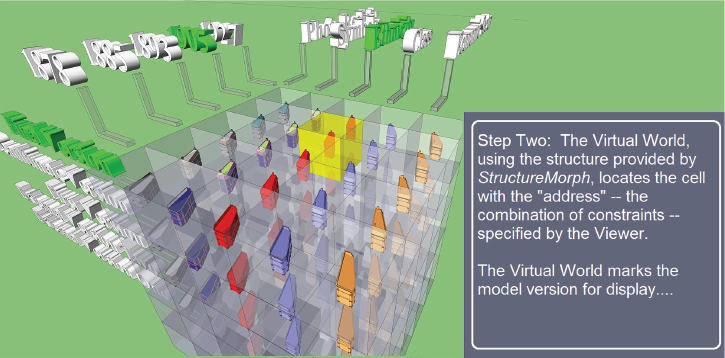

Now how would such a Complex Object — a 4D building that behaves like an electron — meet the requirements of architectural and social historians? Its capacity to be more than one thing at once would allow it to respond to the multiple demands placed upon it. In one instance, it would be asked to function like a 2D polygon: if its occupants were English, the structure would shift from a photo-realistic representation to one where the entire structure is red. In another instance, in a scholarly treatment of the building’s life cycle, the structure would shift from its appearance in 1878 to its appearance in the 1920s. Our Complex Object will have the capacity to be more than one thing at once because it is conceived not as a thing but rather as a three-dimensional matrix of things. (See Figure One)

The matrix will contain multiple three-dimensional increments, or cells. Within each cell, you will find a version of the 4D model. If we were to think of our electron analogue in these terms, you would find a particle in one cell, a wave in the next. The characteristics of the given version will be determined by the location — the address — of the cell in which it finds itself. That address will be specified by its position relative to the three axes in the matrix. Each axis in the matrix will specify one of three things about the model:

-

Its temporal state — Each increment along this axis will indicate a change in a structure’s shape or surface appearance over the course of its life cycle (indicating significant dates in a building’s life cycle, which, in our example in Figure Two, included 1878, 1885, 1893, 1905, and 1927). (See Figure Two)

-

Its expressive state — Each increment here will tie the structure to a specific data set, a specific ontology, and a colour scheme aligning specific colours with categories in the ontology. Ontologies represented here will encompass everything from degrees of modeler confidence in building constituents to demographic categories (class, ethnicity) to economic sectors. (See Figure Three)

-

Its interpretive state — Increments along this axis will refer to either the final version of the model, or prior versions of the model produced by the model contributors (such as the penultimate and first versions), or other scholars elsewhere. (See Figures Four and Five)

Figure Two

Figure Four

Figure Five

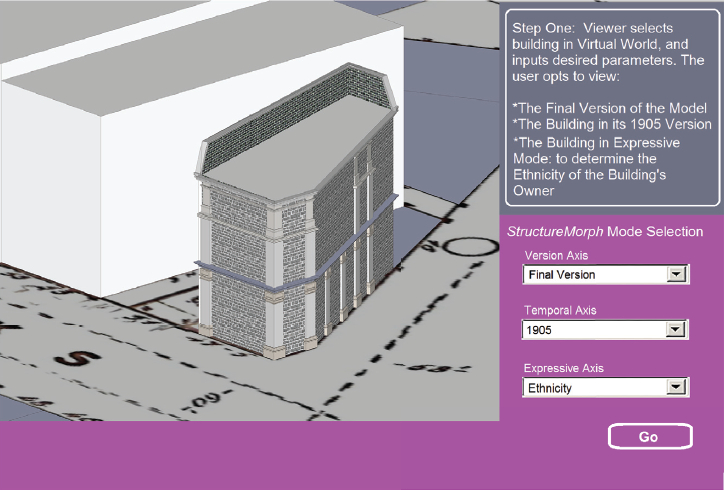
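The matrix described above can be sketched as a mapping from three-part addresses to model versions. This is a hypothetical data structure for illustration, not StructureMorph's implementation: the `ComplexObject` class, the axis labels on the expressive and interpretive axes, and the model reference string are assumptions, while the dates on the temporal axis are those given for Figure Two.

```python
from __future__ import annotations
from dataclasses import dataclass, field
from itertools import product

@dataclass
class ComplexObject:
    """A three-dimensional matrix of model versions.

    Each cell's address is a (temporal, expressive, interpretive) triple; the value
    stored there is a reference to one version of the 4D model.
    """
    temporal: list[int]        # significant dates in the building's life cycle
    expressive: list[str]      # data set / ontology / colour scheme identifiers
    interpretive: list[str]    # final, prior, or externally produced versions
    cells: dict[tuple, str] = field(default_factory=dict)

    def put(self, when: int, expression: str, interpretation: str, model_ref: str) -> None:
        """Store a model version at the cell whose address matches the three states."""
        if (when not in self.temporal or expression not in self.expressive
                or interpretation not in self.interpretive):
            raise KeyError(f"{(when, expression, interpretation)} lies outside the axes")
        self.cells[(when, expression, interpretation)] = model_ref

    def empty_cells(self) -> list[tuple]:
        """Addresses for which no model version has been supplied yet."""
        return [a for a in product(self.temporal, self.expressive, self.interpretive)
                if a not in self.cells]

building = ComplexObject(
    temporal=[1878, 1885, 1893, 1905, 1927],
    expressive=["photo-realistic", "ethnicity", "confidence"],
    interpretive=["final", "penultimate", "first", "external"],
)
building.put(1878, "photo-realistic", "final", "bldg_1878_final")
print(len(building.empty_cells()))  # 5 * 3 * 4 - 1 = 59
```

Listing the empty cells gives contributors a simple checklist of which versions still need to be supplied before every combination of states can be expressed.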

Model expression will be determined by changes in virtual world-time or user interaction, promptings which will lead to the expression of one of the potential versions available. The Complex Object will, so to speak, “collapse” upon user or computer prompting to show one cell or increment. The cell or increment that is activated will depend on the characteristics — the parameters — specified by the given viewer. The viewer will tell the virtual world what he or she wants to see. The Complex Object will reply by activating the cell and model version with the “address” that matches the combination of parameters specified by the viewer. (See Figure Six)
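The "collapse" step can be sketched as an address lookup. In this hypothetical version, virtual-world time selects the temporal coordinate (the latest increment on or before the current world year), while the viewer supplies the expressive and interpretive coordinates. The `collapse` function, its default parameters, and the cell contents are assumptions for illustration.

```python
import bisect

def collapse(cells, temporal_axis, world_year,
             expression="photo-realistic", interpretation="final"):
    """Resolve a Complex Object to the single cell matching the viewer's parameters.

    The temporal coordinate is driven by virtual-world time: the latest increment
    on or before `world_year` is chosen. The other two coordinates come from the viewer.
    """
    axis = sorted(temporal_axis)
    i = bisect.bisect_right(axis, world_year) - 1
    if i < 0:
        raise LookupError(f"no temporal state at or before {world_year}")
    address = (axis[i], expression, interpretation)
    if address not in cells:
        raise LookupError(f"no model version stored at {address}")
    return address, cells[address]

# Hypothetical cells keyed by (temporal, expressive, interpretive) address.
cells = {
    (1878, "photo-realistic", "final"): "bldg_1878_final",
    (1893, "ethnicity", "final"): "bldg_1893_ethnicity",
}
axis = [1878, 1885, 1893, 1905, 1927]
print(collapse(cells, axis, 1900, expression="ethnicity"))
# ((1893, 'ethnicity', 'final'), 'bldg_1893_ethnicity')
```

Using `bisect_right` means a viewer standing in 1900 sees the 1893 state until the world clock reaches the next recorded change in 1905.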

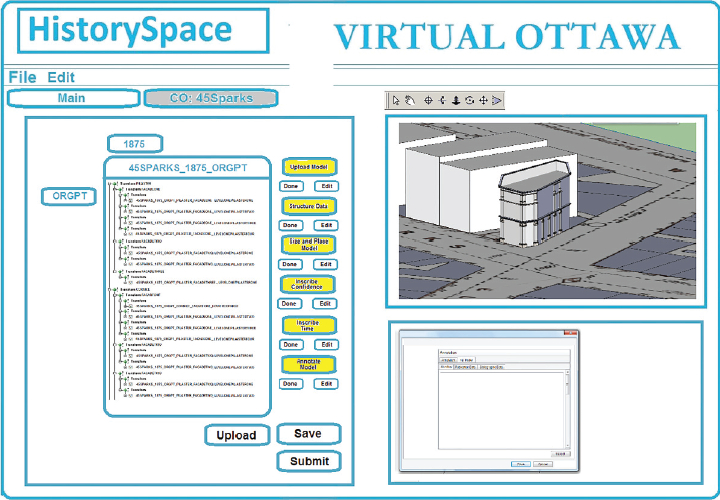

To build such an object, one could of course write a program to do so. The drawback to such a solution is that it requires the contributor to code. Happily, programming is not the only method available for Complex Object creation. It is possible to conceive a tool-based method that rests on the day-to-day capabilities of most users — such as the capacity to drag and drop objects — and on known, easy-to-use interface conventions — such as drop-down menus. These two things can be harnessed to enable easy construction of Complex Objects, easy specification of their behaviours, and automatic joining of 4D models with their attribute databases. That is precisely what we are planning to do with a modification of the Unity game engine known as StructureMorph. Through this plug-in, we believe it will be possible to generate Complex Objects using an interface much like the one shown in Figure Seven. The tool and interface will support a six-step workflow devoted to the following:

-

Step One: Uploading 3D model version to Virtual World

-

Step Two: Structuring 3D model data

-

Step Three: Scaling and Positioning model in Virtual World

-

Step Four: Inscription of Confidence on 3D model constituents

-

Step Five: Inscription of Time Stamp on 3D model constituents

-

Step Six: Annotation of Model
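The six steps can be read as a pipeline applied to one model version. The sketch below is hypothetical and is not StructureMorph's (or Unity's) API. It represents a model as a plain dictionary so that each step stays visible. Step Three applies a uniform scale followed by a translation to every vertex. Steps Four and Five refuse to inscribe values on constituents that Step Two did not define.

```python
# Hypothetical six-step pipeline; a model is a plain dict so each step stays visible.

def upload(name, vertices):                         # Step One
    return {"name": name, "vertices": list(vertices), "nodes": {},
            "confidence": {}, "time_stamps": {}, "notes": []}

def structure(model, nodes):                        # Step Two: node -> vertex indices
    model["nodes"] = {n: list(ix) for n, ix in nodes.items()}
    return model

def scale_and_position(model, scale, offset):       # Step Three: scale, then translate
    ox, oy, oz = offset
    model["vertices"] = [(x * scale + ox, y * scale + oy, z * scale + oz)
                         for x, y, z in model["vertices"]]
    return model

def _inscribe(model, key, values):
    """Attach values to constituents, rejecting names that Step Two did not define."""
    unknown = set(values) - set(model["nodes"])
    if unknown:
        raise KeyError(f"unknown constituents: {sorted(unknown)}")
    model[key].update(values)
    return model

def inscribe_confidence(model, levels):             # Step Four
    return _inscribe(model, "confidence", levels)

def inscribe_time_stamps(model, stamps):            # Step Five
    return _inscribe(model, "time_stamps", stamps)

def annotate(model, note):                          # Step Six
    model["notes"].append(note)
    return model

model = upload("theatre_v1", [(0, 0, 0), (1, 0, 0), (1, 1, 2)])
structure(model, {"walls": [0, 1], "roof": [2]})
scale_and_position(model, 2.0, (10.0, 0.0, 0.0))
inscribe_confidence(model, {"walls": "real-world data", "roof": "inference"})
inscribe_time_stamps(model, {"walls": 1878, "roof": 1885})
annotate(model, "Roof pitch inferred from comparable structures.")
print(model["vertices"])  # [(10.0, 0.0, 0.0), (12.0, 0.0, 0.0), (12.0, 2.0, 4.0)]
```

The sample geometry, dates, and note are placeholders. The point of the design is that Step Two comes before Steps Four and Five, so confidence levels and time stamps attach to named constituents rather than to raw geometry.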

IV

There is neither time nor space here to provide an in-depth description of how each step in our proposed workflow will be implemented in StructureMorph. What I would like to offer instead is a brief discussion that makes the operation of the Complex Object more concrete. I have argued, through an appeal to Harold Innis, that thoughtful appropriation and design of expressive and analytical instruments are essential to good scholarship. I have further argued that, given contemporary trends in computing, historians would do well to revisit the question of design, and that the convergence of multiple applications and the concurrent creation of novel expressive forms offer two plausible responses to the challenges and opportunities afforded by computing. There is, however, much abstraction in that prescription. How would this appeal for design — this appeal for Complex Object creation — bear on the actual execution of scholarship?

Figure Seven

One might consider this question in the context of domains such as architectural history, digital archaeology, and urban history. There, fulfillment of Steps One and Two of the workflow above would provide a powerful way for scholars to make reference to previous work. Their completion would not only involve a given scholar transferring one or more versions of a given structure to a target virtual environment. It would also involve appropriating outside versions of the modeled structure from neighbouring Geo-Spatial globes, and re-structuring the Scene Graph of imported models (the underlying structure of a model that divides a building model into separate constituents) to bring them into conformance with models already extant. All three tasks will need to be completed to fill the interpretive axis of the project’s Complex Object.

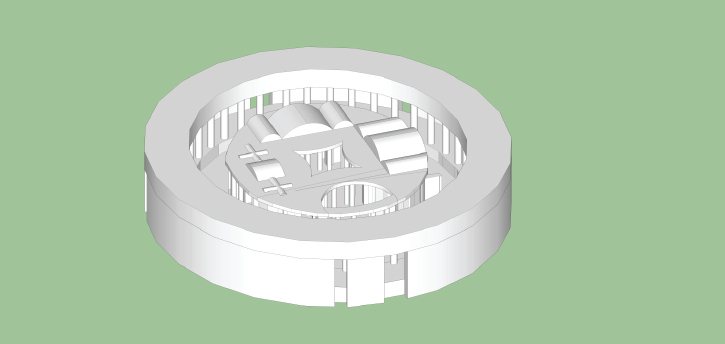

The importance of performing these three tasks, and the expressive power of doing so, becomes clearer when we consider scholarly projects such as those devoted to reconstructing Hadrian’s Villa. Hadrian’s Villa was built in the second century CE for the Roman emperor Hadrian, who disliked living in Rome. As a site, it has fascinated architectural historians and classical scholars because Hadrian made a conscious effort to create structures that represented the cultures of multiple domains in the Empire. The site has also been the focus of at least five attempts to reconstruct its appearance, the most recent originating in Germany, Italy, and the United States. One part of the complex that has generated particular interest is the Maritime Theatre, where the emperor would retire when he wanted to be by himself. The entire Theatre complex was surrounded by a moat and drawbridge, which Hadrian used to limit contact when he so chose. (See Figure Eight) One interesting byproduct of the scholarship on this structure is that reconstruction teams have reached very different conclusions regarding its probable appearance. The German reconstruction of the roof, for example, shown at the top of Figure Nine, is very different from its Italian counterpart, shown at the bottom.

Any new effort to reconstruct Hadrian’s Villa will need to engage these previous efforts and indicate why the new reconstruction differs from those that came before. In the context of our digital platform, such an explanation will require the retrieval and display of previous models of the Maritime Theatre. Scholars affiliated with the new initiative will also need the capacity to highlight and remove constituents of the given model over the course of the comparison, to draw viewer attention, to take one example, to each version of the roof. The comparison will also require versions that are structured (sub-divided) in the same way, to make them directly comparable. The Complex Object as conceived will meet these requirements.

Figure Nine

Graphics: John Bonnett
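The comparison just described depends on versions being sub-divided alike. A minimal, hypothetical sketch: each reconstruction is a mapping from constituent names to geometry references, and `isolate` hides everything except one constituent, such as the roof, in every version. If the versions are not structured the same way, it refuses to compare them. The labels and geometry references are placeholders, not data from the actual reconstructions.

```python
def isolate(versions, constituent):
    """Keep only `constituent` in every version so a single element can be compared.

    `versions` maps a version label to a dict of constituent name -> geometry
    reference. Comparison is refused unless every version is sub-divided alike.
    """
    structures = {frozenset(parts) for parts in versions.values()}
    if len(structures) > 1:
        raise ValueError("versions are structured differently; restructure them first")
    return {label: {constituent: parts[constituent]} for label, parts in versions.items()}

# Placeholder geometry references for two hypothetical reconstructions.
reconstructions = {
    "German": {"roof": "de_roof", "colonnade": "de_colonnade", "island": "de_island"},
    "Italian": {"roof": "it_roof", "colonnade": "it_colonnade", "island": "it_island"},
}
print(isolate(reconstructions, "roof"))
# {'German': {'roof': 'de_roof'}, 'Italian': {'roof': 'it_roof'}}
```

The structural check is what makes Scene Graph restructuring a prerequisite: two models that divide the same building differently cannot be lined up constituent by constituent.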

Creating the tools and means to complete Step Two, however, will not be a trivial task. As I noted above, the Scene Graph is what provides the underlying structure of a given model. In principle, you can generate a model from code containing a single list of numeric points in three-dimensional space. In practice, it is better to create a model that divides those numeric points into containers, or nodes, and arranges them in a tree-like structure. Together, these nodes make up the Scene Graph. If one thinks of a model as a three-dimensional essay, the node can be seen as one of the paragraphs comprising the essay. Now, each node can be made to correspond with a given building constituent, such as a pilaster or upper cornice. But here, we can anticipate an important problem when it comes to model comparison and Complex Object construction. There is no reason to presuppose that one scholarly team will structure their model of Hadrian’s Villa, or anything else they happen to be making, in the same way as counterparts located elsewhere. To enable scholars to compare multiple models effectively, we need a three-dimensional analogue of RDF, XPath, and XSLT, technologies that together allow foreign texts to be described, queried, and reformatted to meet the needs of a host site. We need a solution that will enable a scholar to restructure the Scene Graph of an imported model.
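A Scene Graph of the kind described here can be sketched as a tree of named nodes, each holding polygon indices. This is a hypothetical structure rather than any engine's format. The `find` method selects a node by a slash-separated path, which only loosely resembles an XPath location path. Such selection is the first thing a scholar needs before an imported model can be queried or reorganized.

```python
from __future__ import annotations
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Node:
    """A scene-graph node: a named container of polygon indices plus child nodes."""
    name: str
    polygons: list[int] = field(default_factory=list)
    children: list[Node] = field(default_factory=list)

    def find(self, path: str) -> Optional[Node]:
        """Select a node by a slash-separated path, a rough analogue of an XPath step."""
        head, _, rest = path.partition("/")
        if head != self.name:
            return None
        if not rest:
            return self
        for child in self.children:
            hit = child.find(rest)
            if hit is not None:
                return hit
        return None

    def all_polygons(self) -> list[int]:
        """Every polygon index held by this node or any descendant."""
        found = list(self.polygons)
        for child in self.children:
            found.extend(child.all_polygons())
        return found

theatre = Node("theatre", children=[
    Node("roof", [0, 1]),
    Node("walls", children=[Node("pilaster", [2]), Node("upper_cornice", [3])]),
])
print(theatre.find("theatre/walls/upper_cornice").polygons)  # [3]
print(theatre.all_polygons())                                # [0, 1, 2, 3]
```

In the essay analogy, each `Node` is a paragraph and each polygon index a word. Two teams could hold the same polygons while nesting them under quite different paths.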

To meet that need we plan to develop a tool that will enable scholars to create new nodes, split existing nodes, and take extant nodes and put them in parent and child relationships. But we are likely going to need something even more fundamental. Every 3D model can be characterized as a combination of points, lines, and polygons. These three things can be considered the “words” of 3D models. They are the primitives that modelers use to make larger constructs. Digital historians are going to need a set of tools that will give them the fine-grained control required to disassemble the existing relationships of 3D model primitives and, in turn, to combine them into new associations, new nodes. The process will be akin to opening a Word document and revising its entire paragraph structure: the underlying “words” remain the same, but the paragraph structure changes. With respect to 3D models, there are few applications that provide the fine-grained, intuitive degree of control over imported structures that I am specifying here. The best application that I’ve seen is one that has been orphaned since the 1990s, Cosmo Worlds. It supported the integration of points, lines, and polygons into new combinations and even supported the bifurcation and extrusion of individual polygons. It was a tremendous tool and I have repeatedly mourned its loss over the past 15 years or so. My programming partners at Edge Hill University and I will be canvassing libraries in the near future in search of code that will enable us to reconstruct the suite of tools found in this impressive software.
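The three operations named above (creating nodes, splitting nodes, and re-parenting them) can be sketched with a hypothetical flat store. In this store, each node records its parent and the set of polygon ids it holds. Splitting moves a subset of a node's polygons into a new sibling node, which regroups the "words" into a new "paragraph" without altering the primitives themselves. The `SceneGraph` class and its method names are illustrative assumptions, not StructureMorph code.

```python
class SceneGraph:
    """Hypothetical flat scene-graph store: node -> parent, node -> polygon ids."""

    def __init__(self, root):
        self.parent = {root: None}
        self.primitives = {root: set()}

    def add_node(self, name, parent, primitives=()):
        """Create a new node under an existing parent."""
        if name in self.parent:
            raise ValueError(f"{name} already exists")
        if parent not in self.parent:
            raise KeyError(parent)
        self.parent[name] = parent
        self.primitives[name] = set(primitives)

    def split(self, node, new_name, moved):
        """Move some of a node's polygons into a new sibling node."""
        moved = set(moved)
        if not moved <= self.primitives[node]:
            raise ValueError("can only move polygons the node already holds")
        self.add_node(new_name, self.parent[node], moved)
        self.primitives[node] -= moved

    def reparent(self, node, new_parent):
        """Make `node` a child of `new_parent`, refusing moves that would create a cycle."""
        ancestor = new_parent
        while ancestor is not None:
            if ancestor == node:
                raise ValueError("re-parenting would create a cycle")
            ancestor = self.parent[ancestor]
        self.parent[node] = new_parent

    def children(self, node):
        return sorted(n for n, p in self.parent.items() if p == node)

g = SceneGraph("theatre")
g.add_node("facade", "theatre", [0, 1, 2, 3])
g.split("facade", "upper_cornice", [3])     # carve the cornice out of the facade
g.reparent("upper_cornice", "facade")       # then nest it beneath the facade
print(g.children("theatre"), g.children("facade"), sorted(g.primitives["facade"]))
# ['facade'] ['upper_cornice'] [0, 1, 2]
```

The cycle check matters because an imported tree that is re-parented carelessly would stop being a tree, and any renderer traversing it would loop.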

We will also be starting projects that support ends extending beyond Complex Object creation. Our ambition via the HistorySpace project is to design and prototype two other objects that we believe will be fundamental to the operation of Virtual Worlds: Narrative Objects and Documentation Objects. The challenges of doing so will again not be trivial. We have had to learn, and are continuing to learn, how to identify exactly what we want and need from digital platforms. In the case of Narrative Objects, for example, we have learned to conceive narratives as sequences of events, a conception in which the order and duration of each event are specified. To do so, we are exploring the use of a solution that is not novel but is still powerful: the musical score. Every event, and every transition between events, is conceived as a “note,” while predefined spaces are divided into octaves. We are further exploring the use of forms as diverse as hub-and-spoke structures, Petri nets (a formalism from mathematics and construction), and literary constructs such as the sideshadow.[45]
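The score metaphor can be made concrete by treating each event or transition as a note with an onset and a duration. Given a point in narrative time, we can then ask which notes are sounding. This is a minimal sketch under assumptions. The `Note` fields, the use of a `space` label as a stand-in for the predefined spaces ("octaves"), and the sample events are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Note:
    """One narrative event or transition, treated like a note in a score."""
    label: str
    space: str          # hypothetical stand-in for the predefined space ("octave")
    start: float        # onset in narrative time, e.g. seconds into the presentation
    duration: float

    @property
    def end(self) -> float:
        return self.start + self.duration

def sounding(score, t):
    """Labels of the notes active at narrative time t, in order of onset."""
    return [n.label for n in sorted(score, key=lambda n: n.start) if n.start <= t < n.end]

def running_time(score):
    """Total running time of the narrative."""
    return max((n.end for n in score), default=0.0)

score = [
    Note("Building erected", "harbour district", 0.0, 10.0),
    Note("Cross-fade", "harbour district", 10.0, 2.0),
    Note("Rear addition built", "harbour district", 12.0, 8.0),
    Note("Census overlay", "harbour district", 12.0, 8.0),
]
print(sounding(score, 11.0))   # ['Cross-fade']
print(sounding(score, 15.0))   # ['Rear addition built', 'Census overlay']
print(running_time(score))     # 20.0
```

Like chords in a score, two notes may sound at once. Here an architectural event and a data overlay share the same interval, which a strictly linear event list could not express.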

V

For historians, there is little that is familiar here. I have asked you to consider the possibility that our discipline should incorporate design into its research activities. Throughout, my burden has been to bring the agenda and tasks that I have proposed into the domain of the familiar, a task that I have undertaken first by referring to the writings of Harold Innis. There, within Canada’s historiographic tradition at any rate, we saw that the preoccupation with design is not new. Throughout his career, Innis sought a deeper understanding of the emergent, complex systems that constituted Canada’s past and present. He believed that understanding could only be purchased through careful attention to the modes of representation that we use to signify and explore economic and cultural systems. To assist his contemporaries and successors in that task, Innis proposed that scholars explore new modes of representation, like information visualization, and new modes of construction, such as those stimulated by the Oral Tradition. Such reforms were necessary, he believed, because the systems that concerned him were spatio-temporal objects. Such reforms were necessary, he argued, because they would keep researchers cognizant of the dimensions of space and time.

In the succeeding sections I made the case that it is now time to revive this latent feature of Canada’s historiographic tradition. I made that case through an environmental scan which suggested that current computation is presenting historians with important challenges and opportunities. We face the challenge of Big Data, to start, a challenge that will continue to increase as more and more primary sources are digitized online. We face the opportunities presented by multiple applications that will enable us to detect important socio-economic and cultural patterns and, via agent-based simulations, test hypotheses to explain their emergence and persistence. To meet those challenges, I suggested scholars apply Harold Innis’ concept of the Oral Tradition by converging multiple applications ranging from Google Earth to GIS and High Performance Computing.

I further suggested, however, that the platforms emerging from such convergences would present challenges — gaps — in their own right. There will be a gap between what such platforms can initially do, and what historians want with respect to expression, narration, and documentation. To bridge those gaps, particularly those relating to expression, I presented a case study: the StructureMorph Project. It is a project currently in development, and for now remains nothing more than a thought experiment. But the value of that thought experiment, I suggest, is that it shows a way that historians can conceive their requirements for digital platforms, and then leverage the properties of computer-generated form to fill them. In so doing, my purpose was to show that a design-oriented research agenda would present problems that are intellectually challenging, and for some, problems that are interesting as well. My purpose was secondly to show that such a research agenda has value. The digital turn presents interesting possibilities for historians, but they will only be realized if some of our members devote the time, labour, and thought required to translate that potential into expressive and analytical instruments that can be used by the profession at large. My final purpose has been to show that it is possible to conceive expressive instruments that are consistent with the user requirements specified by Innis during the last decade of his life. The Complex Object is a four-dimensional instrument that will, when realized, direct viewer attention to the dimensions of space and time.

So, while there is much that seems foreign here, I hope that I have shown a research agenda that supports purposes long familiar to historians. Those of us committed to a research agenda devoted to design want to tell a good story, one that goes beyond mere recounting and reaches the level of art. We want to present an analysis, one that pays due respect to the work of our predecessors and clearly and accessibly displays the new insights we have to share. And we want to show that we have good reasons for the knowledge claims we are offering to colleagues and contemporaries, documentation that will show what we have done and provide a basis for correcting what we should have left undone. We want, in the end, knowledge constructs that are the product of sound and thoughtful design. To make them, I have argued that historians must embrace the unfamiliar. We should ascertain what we want and appropriate, or create, the forms we need to do what we want. It is not easy work, but I like to think that Harold Innis would have approved.

Appendices

Biographical note

JOHN BONNETT (History, Brock University) is an intellectual historian and Canada Research Chair in Digital Humanities. He is the author of Emergence and Empire: Innis, Complexity and the Trajectory of History, a work awarded the 2014 Gertrude K. Robinson Book Prize. He was the principal developer of The 3D Virtual Buildings Project, an initiative that uses 3D modeling to develop student critical thinking skills in the history classroom. He is also the project lead of The DataScapes Project, an initiative that explores how Augmented Reality can be used as a medium for landscape art.

Notes

-

[1]

To be sure, the field of digital history is not characterized solely or predominantly by the issue of design, and the latest resurgence of computationally-supported history is generating an increasingly voluminous literature. The most recent published anthology surveying the field is Toni Weller, ed., History in the Digital Age (New York: Routledge, 2013). Two online sites also offer excellent and relatively recent surveys of the field, starting with the Roy Rosenzweig Center for History and New Media: see “Essays on History and New Media” at http://chnm.gmu.edu/essays-on-history-new-media/essays/ <viewed 20 January 2015>; The Digital History Project situated at the University of Nebraska-Lincoln offers a stimulating array of essays, interviews, and lectures describing the state of the field over the past ten years: see http://digitalhistory.unl.edu/essays.php <viewed 20 January 2015>. Finally, for a survey of work specific to Canada, see John Bonnett and Kevin Kee, “Transitions: A Prologue and Preview of Digital Humanities Research in Canada,” Digital Studies 1, no. 2 (2009), available online at: http://www.digitalstudies.org/ojs/index.php/digital_studies/article/view/167/222 <viewed January 2015>.

One important trend that is gaining increased traction in digital history is the field of historical GIS (Geographic Information Systems), which uses GIS software to locate spatial patterns in attribute data. See especially Anne Kelly Knowles, ed., Placing History: How Maps, Spatial Data and GIS are Changing Historical Scholarship (Redlands, CA: ESRI Press, 2008); and Jennifer Bonnell and Marcel Fortin, eds., Historical GIS Research in Canada (Calgary: University of Calgary Press, 2014). Traditionally, digital historians have used computers to treat quantitative data, but scholars — historians and historically-minded literary researchers — have started to use computer-supported text analysis to support research, particularly in their explorations of Big Data. Recent contributions here include Ian Milligan’s “Mining the Internet Graveyard: Rethinking the Historians’ Toolkit,” Journal of the Canadian Historical Association 23, no. 2 (2012): 21–64; and Shawn Graham, Ian Milligan, and Scott Weingart, Exploring Big Historical Data: The Historian’s Macroscope (London: Imperial College Press, 2015). Franco Moretti has done much to promote text analysis through the computationally-supported analysis of large corpora, or “distant reading.” See Franco Moretti, Distant Reading (New York: Verso, 2013). Finally, see Patrick Manning, Big Data in History (New York: Palgrave MacMillan, 2013). For a programmatic call for historians to explore Big Data, see chapter 5 of Jo Guldi and David Armitage, The History Manifesto (Cambridge: Cambridge University Press, 2014). Scholars in the field of oral history have also drawn on computing to assist their research, and recent efforts here include those of Douglas A. Boyd and Mary A. Larson, eds., Oral History and Digital Humanities: Voice, Access, and Engagement (New York: Palgrave MacMillan, 2014); Steve High, director of the Centre for Oral History and Digital Storytelling, has been a leader in the field and has developed software to support the acquisition, storage, and analysis of interview data: see http://storytelling.concordia.ca/ <viewed 20 January 2015>. A number of historians have recently started exploring the use of computing to support pedagogy. See multiple contributions on this score in Kevin Kee, ed., Pastplay: Teaching and Learning History with Technology (Ann Arbor: University of Michigan Press, 2014). For an exploration of how 3D modeling can be used as a basis for historical pedagogy, see John Bonnett, “Following in Rabelais’ Footsteps: Immersive History and the 3D Virtual Buildings Project,” History and Computing 13, no. 2 (2001): 107–50, available online: http://hdl.handle.net/2027/spo.3310410.0006.202 <viewed 27 August 2014>.

-

[2]

Ian Watson’s The Universal Machine offers an accessible survey of the history of digital computation and its consequences. See Ian Watson, The Universal Machine: From the Dawn of Computing to Digital Consciousness (Berlin: Springer-Verlag, 2012). See also Walter Isaacson, The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution (New York: Simon and Schuster, 2014).

-

[3]

Here I am referring to tools such as Trimble SketchUp, a 3D modeling package that university faculty and students can procure for minimal or no cost (see http://www.sketchup.com). Other freely available tools include NetLogo, an agent-based modeling tool that can be used to create simulations of historic systems, and Blender, an open-source 3D modeling and animation package that can be used to create animations in 3D/4D virtual worlds (see https://ccl.northwestern.edu/netlogo/ and http://www.blender.org).

-

[4]

The insight that humanists have a role to play in the design of content dissemination platforms is not new. Scholars collaborated with printers such as Johannes Gutenberg in the design of the print book, bringing or inventing design solutions to support formatting and other important tasks, including content searching. See Walter Ong, Ramus, Method, and the Decay of Dialogue (Chicago: University of Chicago Press, 2005); and Elizabeth L. Eisenstein, The Printing Press as an Agent of Change: Communications and Cultural Transformations in Early-Modern Europe, Volumes I and II (Cambridge, UK: Cambridge University Press, 1979).

-

[5]

This insight has become an increasingly central concern in the digital humanities. See, for example, Stephen Ramsay and Geoffrey Rockwell, “Developing Things: Notes Towards an Epistemology of Building in the Digital Humanities,” in Debates in the Digital Humanities, ed. Matthew K. Gold (Minneapolis: University of Minnesota Press, 2012), 75–84; Stan Ruecker and the INKE Research Group, “Academic Prototyping as a Method of Knowledge Production: The Case of the Dynamic Table of Contexts,” Scholarly and Research Communication 5, no. 2 (2014); P. Michura, M. Radzikowska, and the INKE Research Group, “Seeing the Forest and Its Trees: A Hybrid Visual Research Tool for Exploring Look and Feel in Interface Design,” in Proceedings of the International Congress of the International Association of Societies of Design Research (IASDR), ed. K. Sugiyama (Tokyo: Shibaura Institute of Technology, 26–30 August 2013); and Stan Ruecker, Stéfan Sinclair, and Milena Radzikowska, Visual Interface Design for Digital Cultural Heritage (Farnham, UK: Ashgate Publishing, 2011).

-

[6]

Chad Gaffield, for example, has devoted much of his career, first as a historian and then as SSHRC president, to considering the implications that computation presents for the discipline of history. See, for example, Chad Gaffield, “Managing an Archival Golden Age in the Changing World of Digital Scholarship,” Archivaria 78 (Fall 2014): 179–91.

-

[7]

For a previous contribution making this case, see John Bonnett, “Charting a New Aesthetics,” Histoire sociale – Social History 40, no. 79 (May 2007): 169–208.

-

[8]

The J.B. Tyrrell Medal is awarded by the Royal Society of Canada for outstanding contributions to the field of Canadian history. It is awarded biennially.

-

[9]

One common complaint regarding null hypothesis significance testing (NHST) is that it rests on the logical fallacy of the excluded middle. NHST uses measurement to reject the null hypothesis, the proposition that the relationship between a given cause and a given effect is random and inconsequential. If, through NHST, a researcher is able to establish that the null does not apply, that result is deemed to establish empirically that the researcher’s proffered hypothesis does apply. Critics have rightly noted that NHST does not rule out the possibility that a third, different cause is at play. Other criticisms of NHST have also been advanced. See Stephen Thomas Ziliak and Deirdre N. McCloskey, The Cult of Statistical Significance: How the Standard Error Costs Us Jobs, Justice, and Lives (Ann Arbor, MI: University of Michigan Press, 2008); Jacob Cohen, “The Earth Is Round (p < .05),” American Psychologist 49, no. 12 (1994): 997–1003; and Gerd Gigerenzer, “Mindless Statistics,” Journal of Socio-Economics 33 (2004): 587–606.

-

[10]

See chapters one and four of M. Mitchell Waldrop, Complexity: The Emerging Science at the Edge of Order and Chaos (New York: Simon and Schuster, 1992).

-

[11]

This argument was most fully developed in two works: see Harold Innis, The Fur Trade in Canada: An Introduction to Canadian Economic History, revised edition (Toronto: University of Toronto Press, 1956, c. 1930); and Harold Innis, The Cod Fisheries: The History of an International Economy (Toronto: The Ryerson Press, 1940).

-

[12]

See Harold Innis, Political Economy in the Modern State (Toronto: The Ryerson Press, 1946); Harold Innis, Empire and Communications (Oxford: The Clarendon Press, 1950); and Harold Innis, The Bias of Communication (Toronto: University of Toronto Press, 1951).

-

[13]

John Bonnett, Emergence and Empire: Innis, Complexity and the Trajectory of History (Montréal and Kingston: McGill-Queen’s University Press, 2013), 243–8.

-

[14]

Bonnett, Emergence, 17–19; Waldrop, Complexity, 44–6.

-

[15]

Harold Innis, “The Newspaper in Economic Development,” Political Economy in the Modern State (Toronto: The Ryerson Press, 1946), 34; Harold Innis to Arthur Cole, 21 October 1941, Harold Innis Papers, University of Toronto Archives, B72-0025, Box 11, File 1.

-

[16]

For introductions to the field of historical GIS, see Amy Hillier and Anne Kelly Knowles, eds., Placing History: How Maps, Spatial Data, and GIS Are Changing Historical Scholarship (Redlands, CA: ESRI Press, 2008); and Ian N. Gregory and Paul S. Ell, Historical GIS: Technologies, Methodologies, and Scholarship, Cambridge Studies in Historical Geography (Cambridge, UK: Cambridge University Press, 2007).

-

[17]

Harold Innis to Arthur Cole, 31 October 1941, Harold Innis Papers, University of Toronto Archives, B72-0025, Box 11, File 1.

-

[18]

Harold Innis, “On the Economic Significance of Cultural Factors,” Political Economy in the Modern State (Toronto: The Ryerson Press, 1946), 99–100.

-

[19]

Innis, “Cultural Factors,” 100.

-

[20]

Harold Innis, “Chapter 6: Printing in the Sixteenth Century,” in “History of Communication,” Harold Innis Papers, University of Toronto Archives, B1972-003, Box 17, pp. 35–6, 38. The original source of Innis’ quotation is Francis Bacon, De Augmentis Scientiarum, Book 5, Chapter 5.

-

[21]

Bonnett, Emergence, 191–8.

-

[22]

See Harold Innis, “A Plea for Time,” in The Bias of Communication (Toronto: The University of Toronto Press, 1951), 61–91.

-

[23]

Gregory and Ell, Historical GIS, 119–44. More recently, Michael Goodchild notes that the “sessions on space-time integration organized at the annual meetings of the Association of American Geographers in 2011 provided ample demonstration of the importance of a space-time perspective, but did little to advance the notion of a modeling environment that might qualify as an STGIS (Space-Time Geographic Information System).” He further notes that it has “proven very difficult to move beyond the metaphor of the map as the conceptual framework for GIS. Today a GIS is still often presented as a computerized container of maps … One casualty of the persistent map metaphor has been time, as maps have always favored the relatively static features of the Earth’s surface … Only recently have some of the more powerful time-dependent aspects of mapmaking begun to attract attention, in products such as the maps one can now readily obtain of local, real-time traffic conditions.” See Goodchild, “Prospects for a Space-Time GIS,” Annals of the Association of American Geographers 103, no. 5 (2013): 1073, 1076.

-

[24]

See David J. Bodenhamer, John Corrigan, and Trevor M. Harris, eds., The Spatial Humanities: GIS and the Future of Humanities Scholarship (Bloomington, Indiana: Indiana University Press, 2010). The purpose of Bodenhamer et al.’s edited collection was to highlight this problem and to propose a construct known as the Deep Map, which might offer one way to connect qualitative constructions of space with mapping. The October 2013 issue of the International Journal of Humanities and Arts Computing presents early attempts to define digital Deep Maps and to establish a set of conventions and methods to support their construction. The four essays in its special section, however, suggest that this enterprise remains in its early stages. See also the forthcoming work of Bodenhamer, Corrigan, and Harris, eds., Deep Maps and Spatial Narratives (Bloomington: Indiana University Press, 2015).

Ian Gregory, at Lancaster University, is developing a different set of methods to express and analyze qualitative conceptions of space. He and his colleagues are devising methods to analyze large corpora of unstructured, historic texts in a GIS environment. See http://www.lancaster.ac.uk/fass/projects/spatialhum.wordpress/ <viewed 20 January 2015>.

-

[25]

Gregory and Ell, Historical GIS, 119.

-

[26]

For a prescient and still relevant article discussing convergence, see Jon Peddie, “Digital Media Technology: Industry Trends and Developments,” IEEE Computer Graphics and Applications 21, no. 1 (January 2001): 27–31. Researchers also refer to this practice as recombinant innovation. See chapter 5 of Erik Brynjolfsson and Andrew McAfee, The Second Machine Age (New York: W.W. Norton and Company, 2014).

-

[27]

Bonnett, Emergence, 209–17.

-

[28]

For a second, similar assessment see Douglas B. Richardson, “Real-Time Space-Time Integration in GIScience and Geography,” Annals of the Association of American Geographers 103, no. 5 (2013): 1062–71.

-

[29]

See John Bonnett, Geoffrey Rockwell, and Kyle Kuchmey, “High-Performance Computing in the Arts and Humanities,” SHARCNET (Shared Hierarchical Academic Research Computing Network) website, available online: https://www.sharcnet.ca/my/research/hhpc <viewed 27 August 2014>; and John Bonnett, “High-Performance Computing: An Agenda for the Social Sciences and the Humanities in Canada,” Digital Studies 1, no. 2 (2009), available online: http://www.digitalstudies.org/ojs/index.php/digital_studies/article/view/168/211 <viewed 27 August 2014>.

-

[30]

Jonathan Rauch, “Seeing Around Corners,” Atlantic Monthly 289, no. 4 (April 2002): 35–48; Joshua Epstein, Generative Social Science: Studies in Agent-Based Computational Modeling (Princeton, NJ: Princeton University Press, 2006); Goodchild, “Prospects for a Space-Time GIS,” 1075.

-

[31]

See http://www.google.com/earth/ <viewed 27 August 2014>.

-

[32]

A second determining factor, particularly in the Google search engine, is the behaviour and selections of prior users interested in the same or similar topics. See Watson, The Universal Machine, 181, 186–9.

-

[33]

For an introduction to the concept of the Geospatial Web, see Arno Scharl and Klaus Tochtermann, eds., The Geospatial Web: How Geobrowsers, Social Software and the Web 2.0 are Shaping the Network Society (London: Springer-Verlag, 2007).

-

[34]

The challenge of Big Data is the rationale for the Digging into Data program, a multi-country, multi-institution effort whose members include SSHRC, NSERC, and CFI. See http://www.diggingintodata.org/ <viewed 27 August 2014>.

-

[35]

David J. Bodenhamer, “The Potential of Spatial Humanities,” in The Spatial Humanities: GIS and the Future of Humanities Scholarship, eds. David Bodenhamer, John Corrigan, and Trevor M. Harris (Bloomington, Ind.: Indiana University Press, 2010), 26–8. See also, in the same volume, Gary Lock, “Representations of Space and Place in the Humanities,” 97–101; and Trevor M. Harris, John Corrigan, and David J. Bodenhamer, “Challenges for the Spatial Humanities,” 171.

-

[36]

May Yuan and Kathleen Hornsby, Computation and Visualization for Understanding Dynamics in Geographic Domains (Boca Raton, Fla.: CRC Press, 2008), 10; David A. Bennett and Wenwu Tang, “Mobile, Aware, Intelligent Agents (MAIA),” in Understanding Dynamics of Geographic Domains, eds. Kathleen Stewart Hornsby and May Yuan (Boca Raton, Fla.: CRC Press, 2008), 171–86; Gary Lock, “Representations of Space and Place in the Humanities,” in The Spatial Humanities, ed. Bodenhamer, 97.

-

[37]

May Yuan, “Mapping Text,” in The Spatial Humanities, ed. Bodenhamer, 116–7; Trevor M. Harris, L. Jesse Rouse, and Susan Bergeron, “The Geospatial Semantic Web, Pareto GIS, and the Humanities,” ibid., 124–42.

-

[38]

Mikhail Bakhtin, Rabelais and his World, trans. Hélène Iswolsky (Cambridge, MA: MIT Press, 1968).

-

[39]

The project is a joint Canada-UK project funded by SSHRC/Plaque Tournante and Edge Hill University, Ormskirk, United Kingdom. Project participants include John Bonnett (Brock University); Léon Robichaud (Université de Sherbrooke); Mark Anderson (Edge Hill University); and Brian Farrimond (Edge Hill University).

-

[40]