Abstracts

Abstract

In the context of a quasi-experimental study on acquisition of cultural competence by trainee translators in the case of German-Spanish translation, a declarative knowledge questionnaire on German culture was designed and validated. This instrument, which consists of 30 items covering four cultural fields, was designed to gather data on the subjects’ declarative knowledge of German culture before performing a translation task. This paper presents the design and validation of the questionnaire, which was based on verification of content validity, criterion validity and face validity. Teachers of German as a second foreign language in the Bachelor’s degree in Translation and Interpreting at the Universitat Autònoma de Barcelona, students of German as a first and second foreign language in the same degree programme, and citizens of the Federal Republic of Germany participated in the validation of the instrument. This resulted in a validated questionnaire that may be useful for other researchers and teachers. Despite its utility, the instrument has some limitations: it does not enable replication of the study with other samples without calibrating it, it is not stable over long periods of time and it only measures cultural knowledge at a nation-state level.

Keywords:

- cultural knowledge,

- declarative knowledge about German culture,

- instrument validation,

- translator’s cultural competence

Résumé

Dans le cadre d’une recherche quasi expérimentale sur l’acquisition de la compétence culturelle du traducteur dans la combinaison allemand-espagnol, un questionnaire de connaissances sur la culture allemande a été élaboré et validé. Cet outil, composé de 30 items provenant de quatre domaines culturels, avait pour but de collecter des données sur les connaissances déclaratives sur la culture allemande des sujets avant d’effectuer une tâche de traduction. L’objectif de cet article est de présenter la conception et la validation du questionnaire, basé sur la vérification de la validité du contenu, la validité de critère et la validité apparente. Des professeurs d’allemand comme deuxième langue étrangère de licence de traduction et d’interprétation de l’Universitat Autònoma de Barcelona, des étudiants d’allemand comme première et deuxième langue étrangère de la même licence et des citoyens de la République fédérale d’Allemagne ont participé à la validation. Le résultat obtenu a été un questionnaire validé qui peut être utile à d’autres chercheurs et enseignants. En dépit de son utilité, l’outil a certaines limitations : il ne permet pas de reproduire l’étude avec d’autres échantillons sans l’étalonner, n’est pas stable sur de longues périodes de temps et ne mesure que les connaissances culturelles au niveau État-nation.

Mots-clés :

- connaissances culturelles,

- connaissances déclaratives sur la culture allemande,

- validation des outils,

- compétence culturelle du traducteur

Resumen

En el marco de una investigación cuasiexperimental sobre la adquisición de la competencia cultural del traductor en la traducción alemán-español, se diseñó y validó un cuestionario de conocimientos declarativos sobre cultura alemana. Este instrumento se compone de 30 ítems que cubren cuatro ámbitos culturales y fue concebido para recoger datos sobre los conocimientos declarativos sobre cultura alemana de los sujetos antes de que realizaran una tarea de traducción. El objetivo de este artículo es presentar el diseño y el proceso de validación del cuestionario, que se basó en la verificación de la validez del contenido, la validez del criterio y la validez aparente. En su validación participaron profesores de alemán como segunda lengua extranjera del Grado en Traducción e Interpretación de la Universitat Autònoma de Barcelona, estudiantes de alemán como primera y como segunda lengua extranjera del mismo grado y ciudadanos de la República Federal de Alemania. Este proceso permitió obtener un cuestionario validado que puede ser de utilidad para otros investigadores y docentes de traducción. A pesar de su utilidad, el instrumento tiene ciertas limitaciones: la replicación del estudio no puede realizarse sin la calibración previa del instrumento para la nueva muestra, no es estable en largos períodos de tiempo y solamente mide conocimientos culturales a nivel estado-nación.

Palabras clave:

- conocimientos culturales,

- conocimientos declarativos sobre cultura alemana,

- validación de instrumentos,

- competencia cultural del traductor

Article body

1. Introduction

The aim of this paper is to present the design and validation of a declarative knowledge questionnaire on German culture. This instrument was used in a quasi-experimental study on the acquisition of translator cultural competence in the case of German-Spanish translation (Olalla-Soler 2017a), where it measured the declarative cultural knowledge of the participants (undergraduate students in Translation and Interpreting at the Universitat Autònoma de Barcelona with German as a second foreign language and professional translators) before they performed a translation task.

Cultural knowledge has been considered of great importance in both translation competence and translator (inter)cultural competence models (see Section 2). From a didactic point of view, contrastive approaches are favoured in teaching this knowledge, whereas approaches based on the transmission of information are widely criticised. However, the limited empirical data currently available indicate not only that professional translators consider declarative cultural knowledge essential for their profession (Gutiérrez Bregón 2016), but also that possessing cultural knowledge and being able to contrast it are two different aspects (Olk 2009; Bahumaid 2010; Olalla-Soler 2019). This suggests that cultural knowledge is acquired in two phases: an initial declarative phase and a second contrastive phase. Despite the emphasis placed on this type of knowledge both in competence models and in translator training, hardly any instruments exist to measure it in empirical research and to assess it in translator training. The questionnaire presented in this paper was designed to be employed in empirical research, although it can also be used for training purposes.

The declarative knowledge questionnaire on German culture consists of 30 items covering four cultural fields: organisation of the natural environment, organisation of the cultural patrimony, organisation of the society, and models of behaviour, values and ideas. The questionnaire contains multiple-choice items with three to four options and a single correct answer. They were designed using several documentation sources, such as the Einbürgerungstest (Bundesamt für Migration und Flüchtlinge 2012)[1] – the Federal Republic of Germany’s (FRG) naturalisation test, which must be passed in order to qualify for citizenship – and the results of Schroll-Machl’s (2002) study on the central cultural standards of the inhabitants of the FRG.

Validation consisted of three phases, each focusing on a specific aspect: content validity, criterion validity and face validity. Teachers of German as a second foreign language in the Bachelor’s degree in Translation and Interpreting at UAB, students of German as a first and second foreign language in the same degree, and German citizens of various ages participated in the validation process.

This paper is organised as follows. First, the conceptual framework is presented. It focuses on the way in which cultural knowledge is addressed in translation competence and translator (inter)cultural competence models as well as in translator training. Second, the design procedure for the questionnaire is explained. Third, the three validation phases are described: content validity, criterion validity and face validity. Finally, we draw some conclusions on the design and validation processes and also discuss the limitations of the instrument.

2. The importance of cultural knowledge in translator training

2.1. Cultural knowledge, translation competence, and translator (inter)cultural competence

Since the beginning of research on translation competence, cultural knowledge has been given great importance and has been included in most of the existing translation competence models. Some of them include this type of knowledge in the cultural or intercultural sub-competence (Nord 1988; Hansen 1997; Kelly 2002, 2005; EMT Expert Group;[2] CIUTI[3]), while other scholars place it in the extra-linguistic (sub)competence (Hurtado Albir 1996; PACTE 2003; Katan 2008) or in other (sub)competences such as the socio-linguistic sub-competence in Bell’s model (1991). Other scholars only refer to cultural knowledge as part of translation competence without embedding it in a specific (sub)competence (Kiraly 1995; Cao 1996; Neubert 2000; Shreve 2006).

Specific models of translator (inter)cultural competence also incorporate cultural knowledge. Witte (2000; 2005) defines translator cultural competence as a translator’s ability to compare cultural phenomena of a foreign culture with one’s own culture and to then translate them into the target culture in a manner appropriate to the translation brief while maintaining communicative interaction. In order to carry out this process, the translator needs two skills: competence-in-cultures and competence-between-cultures. Competence-in-cultures is where cultural knowledge is located, and it encompasses perceptual tendencies and behavioural patterns, attitudes, values, etc. related to the working cultures. Bahumaid’s model of translator cultural competence (2010) comprises two types of encyclopaedic knowledge of the source and target culture: knowledge of different aspects of history, geography, politics, economics, society, culture, education, justice, administration, etc., and knowledge related to the countries of the source and target languages. PICT’s model of translator intercultural competence (2012)[4] makes no explicit mention of cultural knowledge, but it includes key concepts of intercultural communication theory in the theoretical dimension of this competence. Yarosh (2012) includes cultural knowledge, understood as knowledge about the differences and similarities between the cultures with which the translator tends to work in terms of attitudes, knowledge and beliefs, modes of behaviour, and their appropriateness in different contexts, in a comparative cultural knowledge competence. Olalla-Soler (2017a) includes a cultural knowledge sub-competence in his model of translator cultural competence. 
This sub-competence includes knowledge of the cultures involved in the translation process, and this knowledge is regarded as essential for identifying cultural references in the source text and interpreting them in accordance with the systems of the source and target cultures.

2.2. Teaching cultural knowledge in translator training

Given the importance of cultural knowledge in translation competence and translator (inter)cultural competence models, this type of knowledge has been the centre of attention in a substantial portion of the debates on the teaching of cultural aspects in translator training (Berenguer 1998; Katan 1999; Witte 2000, 2008; Kelly 2002; Clouet 2008; Yarosh and Muies 2011).

It is widely agreed that teaching cultural knowledge should not be restricted to transmission of facts and intercultural differences since this content is mainly geared to memorisation of declarative knowledge on issues such as history, literature, political institutions, etc. (Katan 1999; Witte 2000; Yarosh and Muies 2011). Thus, many authors emphasise that teaching such knowledge requires a contrastive approach (Berenguer 1998; Witte 2000; Clouet 2008; Katan 2009; Yarosh and Muies 2011). Kelly (2005) and Kelly and Soriano (2007) also praise the use of approaches based on immersive experiences in the working cultures by means of mobility programmes, exchanges or meetings with foreign students.

2.3. A two-phase acquisition of cultural knowledge in translator training

Findings such as those obtained in the studies conducted by Olk (2009), Bahumaid (2010), Gutiérrez Bregón (2016), and Olalla-Soler (2019) suggest that possessing declarative cultural knowledge not only leads to better resolution of cultural translation problems but is also considered very valuable by professional translators, as indicated by the translators who participated in Gutiérrez Bregón’s study (2016). These translators stressed the need for knowledge on topics such as history, geography, beliefs, customs, institutions, literature, gastronomy, music, art, politics, values, legislation, and the socio-economic context of both the source and target cultures. Olk (2009) conducted an empirical study to investigate the shortcomings and cultural translation problems encountered when a sample of 19 last-year students of German Philology at a British university translated a newspaper article from English into German. Olk observed that many of the incorrect solutions provided by the students were caused by a lack of knowledge about the source culture. Bahumaid (2010) conducted an empirical study with ten postgraduate students of English-Arabic translation from two universities in the United Arab Emirates to evaluate the level of cultural competence of English-Arabic translation students in postgraduate programmes at Arab universities. Bahumaid found that the students had major deficiencies in their knowledge of both source and target cultures, which led to inadequate translation solutions. Olalla-Soler (2017a) conducted a quasi-experimental study with independent samples from students from the four years of the Bachelor’s degree in Translation and Interpreting at the Universitat Autònoma de Barcelona with German as a second foreign language and Spanish as their mother tongue. 
He observed that even when students possessed cultural knowledge of the source culture, they were unable to put it into practice when solving cultural translation problems and thus produced low-quality solutions. The professional translators who participated in the quasi-experiment, by contrast, were able to put their knowledge into practice, and their solutions were higher in quality than those of the students.

The results of the aforementioned empirical studies suggest that possession of cultural knowledge, its acquisition and being able to contrast it are different elements, as is reflected in the author’s model of translator cultural competence (Olalla-Soler 2017a), which distinguishes cultural knowledge, the skills required to acquire this knowledge, and the ability to contrast cultural knowledge of the working cultures regarding a perceived cultural phenomenon. These findings favour the viewpoints of those scholars who advocate for the co-existence of teaching methods that are focused on transmission of declarative cultural knowledge with approaches that are geared towards developing contrastive cultural skills.

2.4. Measuring cultural knowledge

Despite the importance given to cultural knowledge in both translation competence and translator (inter)cultural competence models and the (limited) evidence of the existence of two phases related to the acquisition of cultural knowledge (an initial declarative phase and a second contrastive phase), there are few tools available to measure the declarative phase for both didactic and research purposes. Hurtado Albir and Olalla-Soler (2016) proposed a series of tasks to assess the acquisition of cultural knowledge in the translation classroom: text analysis, commented translations, reports on cultural references, cultural knowledge questionnaires, and cultural portfolios. As for research purposes, it should be mentioned that Bahumaid (2010) did not measure the subjects’ cultural knowledge despite having attributed translation errors to a lack of knowledge of the source culture. Olk (2003; 2009) used think-aloud protocols and established six types of actions related to a lack of cultural knowledge that the students performed when translating (Olk 2003: 168-169): 1) a student states at any point during the translation that he or she is uncertain about the meaning of a cultural reference or any aspect of its meaning relevant to its translation, or reports any such problem during retrospection; 2) a student uses a cultural dictionary to establish or confirm a meaning hypothesis; 3) a student states an incorrect meaning hypothesis, but corrects the incorrect hypothesis during translation; 4) a student forms an incorrect meaning hypothesis during the think-aloud, which he or she does not correct during translation or reveals an incorrect meaning hypothesis during retrospection; 5) a student encounters a translation problem in relation to a cultural reference, or chooses an inappropriate solution for a cultural reference, but does not appear to be aware of the item’s culture-specific nature; 6) a student’s written translation shows a clear misunderstanding of a cultural 
reference, even though no knowledge problem was reported.

Three tasks were designed to measure the declarative cultural knowledge possessed by the subjects for the author’s quasi-experiment (Olalla-Soler 2017a). This knowledge was not acquired ad-hoc through documentation sources. These three tasks were: characterising the culturemes in a text, explaining the meaning of some of these culturemes, and filling in a declarative knowledge questionnaire on German culture. The results of these tasks can be found in Olalla-Soler (2019).

In this paper, we discuss the design and validation process of the declarative knowledge questionnaire on German culture. This tool can be used both for empirical research and for assessing the acquisition of cultural knowledge. The design and validation process that we describe in the following sections may also assist other researchers in developing their own declarative knowledge questionnaires.

3. Designing a declarative knowledge questionnaire on German culture

The main objective of this questionnaire was to collect data on the declarative knowledge of German culture possessed by the subjects who participated in a quasi-experiment on acquisition of translator cultural competence in the case of German-Spanish translation (Olalla-Soler 2017a). These data corresponded to one of the three indicators that were used to measure the knowledge of the source culture that the subjects possessed before translating a text containing cultural translation problems. Thirty-eight students of German as a second foreign language from the four years of the Bachelor’s degree in Translation and Interpreting at UAB and 10 professional translators with more than 10 years of experience participated in the study.

The data on cultural knowledge of German culture collected from this questionnaire had to reflect the overall level of knowledge possessed by the subjects. Therefore, the items to be included in the questionnaire had to cover cultural topics that were neither too precise nor too obvious.

A second major consideration in the design and validation process was to adapt the questionnaire to the group that had to complete it: students of German as a second foreign language. Given that there is no specific course on cultural aspects for second languages in the Bachelor’s degree in Translation and Interpreting at UAB, cultural aspects are covered either in the language classroom or in the translation classroom, and therefore cultural knowledge acquisition does not occur as markedly as in the case of German as a first foreign language, where the curriculum includes a compulsory course on cultural aspects.

Three phases were required in the design of the instrument. First, the cultural fields to be covered by the questionnaire were defined. Second, an initial set of items was formulated. Third, changes were introduced based on the data obtained in the validation process.

3.1. Defining the cultural fields

The questionnaire was intended to collect data on general cultural issues and thus provide an overall picture of the student subjects’ knowledge. However, the time that the subjects could devote to completing the questionnaire was limited and, therefore, the greatest possible variety of topics had to be covered in the fewest possible items. Moreover, declarative knowledge relating to a culture is inexhaustible and countless items could be formulated. Subjects may therefore answer the specific items of the questionnaire incorrectly and yet have a very broad knowledge of other topics that were not included.

To generate items corresponding to different topics and fields, we used the cultural fields that Molina (2001: 92-94) defined for classifying culturemes:

Organisation of the natural environment. Knowledge related to flora, fauna, atmospheric phenomena, landscapes, place names, etc.

Organisation of the cultural patrimony. Knowledge related to historical and fictional characters, historical facts, religions, festivities, popular beliefs, folklore, monuments, fine arts, typical games, tools, objects, agricultural and livestock techniques, urbanism, military strategies, means of transport, etc.

Organisation of the society. Knowledge related to conventions and social customs (forms of treatment and courtesy, ways of dressing, gestures, greetings, etc.) and social organisation (the legal, educational and political systems, units of measure, calendars, etc.).

Organisation of language and communication needs. Knowledge related to proverbs, accepted metaphors, interjections, blasphemies, transliterations, etc.

Knowledge of behaviour models, values and ideas. This field was not included in Molina’s proposal and was added by Olalla-Soler (2017a).

3.2. Drafting the first set of items

After defining the cultural fields that the questionnaire would include, we began formulating the items that would constitute the first set.

We used three resources to gather ideas and to elaborate the items. The first one was the Einbürgerungstest (Bundesamt für Migration und Flüchtlinge 2012), the Federal Republic of Germany’s naturalisation test, which must be passed to be eligible for citizenship. This questionnaire was designed at Humboldt University in Berlin and measures the level of declarative knowledge about various aspects of the country: history, geography, institutions, legal issues, rights and obligations, religion, social habits, national symbols, political system, constitutional aspects, etc.

This test has been used in all Länder of the FRG since 2008. It consists of a set of 310 items, 33 of which are randomly selected for each test. The items are multiple choice questions with four possible options and a single correct answer.

The second resource was the book Duden Allgemeinbildung (2010),[5] published by Duden. It is a self-learning book which includes 1000 questions about the FRG on issues such as flora, fauna, history, geography, language, culture, etc. As in the Einbürgerungstest, the questions are multiple-choice, with four possible options and a single correct answer.

The third resource was the book Die Deutschen (2002),[6] by Sylvia Schroll-Machl. This book presents a study on the central cultural standards of the inhabitants of the FRG, specifically in the work environment. In some cases the author extends these standards to other areas, although she also questions whether certain standards can be generalised to the entire German population. The standards that Schroll-Machl identified were: objectivity, appreciation for norms and structures, internalised and norm-oriented control, time planning, adaptation of personality traits to different environments, clear and direct communicative style, and individualism (Schroll-Machl 2002: 34). These seven standards were used to formulate items related to the knowledge of behaviour models, values and ideas.

After reviewing these three resources, we drafted many items for the four cultural fields. The drafting process was guided by three principles: the items should be multiple-choice, they should have three or four possible options with only one correct answer, and they should be clearly and precisely worded.

The first version of the questionnaire consisted of 37 items. Two items that did not comply with the established principles were discarded. The questionnaire then comprised 35 items: 13 related to the organisation of the cultural patrimony, 3 related to linguistic and communicative needs, 5 related to the natural environment and 14 to the organisation of the society. In this first version, items related to behaviour models, values and ideas were not included.

Once this version of the questionnaire was completed, it was validated. In each of the validation phases, some items were deleted or modified, and new ones were drafted.

4. Validating the questionnaire

We could not use common validation procedures, such as principal component analysis and internal consistency analysis (DeVellis 1991; Litwin 1995; Muñiz, Fidalgo, et al. 2005), to validate this questionnaire since these procedures are not applicable to multiple-choice items (for example, Cronbach’s alpha is applicable to scale responses, Kuder-Richardson 20 to dichotomous responses and Kuder-Richardson 21 to dichotomous responses when all items have a similar difficulty). Additionally, our questionnaire included different fields with items that measure different cultural aspects in each of them, so they cannot be considered constructs. The items have different levels of difficulty, which also complicates the analysis using normal procedures. For these reasons, to validate this instrument, we developed our own validation method based on different validity types: content validity, criterion validity and face validity (DeVellis 1991; Litwin 1995; Muñiz, Fidalgo, et al. 2005).

In this section we present the procedures we followed in each of the three validation phases, as well as the participants involved in each phase and the results obtained.

4.1. Phase one: content validity

DeVellis (1991: 43) defines the concept of content validity as follows: “[c]ontent validity concerns item sampling adequacy – that is, the extent to which a specific set of items reflects a content domain.”

We considered content validity to be the inclusion of items of various levels of difficulty for all four cultural fields of the questionnaire, as well as the identification of items that were not properly formulated or that fell outside the scope of the questionnaire. To that end, the first version of the 35-item questionnaire was subjected to expert judgement.

Seven teachers of German as a second foreign language in the Bachelor’s degree in Translation and Interpreting at UAB were invited to participate as experts. The aim of the expert judgement was to evaluate the level of difficulty of the questionnaire’s items in order to select those that were in line with the cultural content dealt with in German as a second foreign language courses.

4.1.1. Procedure

We developed an online version of the first draft of the questionnaire, which contained 35 items, and added a semantic differential to each item. The scale ranged from 1 (easy) to 6 (difficult). Using these scales, the experts rated each item according to how difficult they thought their students would find it (Figure 1).

Figure 1

Example of an item and the semantic differential used by the experts in their judgement

Experts were also able to comment on the questionnaire in general or on specific items.

After the data were collected, the mean difficulty of each item was calculated. The items were then classified into three groups according to their level of difficulty: low difficulty (means 1 to 2.66), medium difficulty (means 2.67 to 4.33) and high difficulty (means 4.34 to 6). Items whose mean was too low (means from 1 to 1.83) or too high (means from 5.17 to 6) were eliminated, and an attempt was made to maintain a balance between the number of items with low, medium and high difficulty. The experts’ comments were also taken into consideration when selecting items. An example of the analysis performed for each item is shown in Table 1.

Table 1

Example of item analysis after expert judgement
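The item analysis described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `classify_item` and the sample ratings are hypothetical, and the mean is rounded to two decimals so that it can be compared directly with the two-decimal boundaries reported in the text.

```python
from statistics import mean


def classify_item(ratings):
    """Return (mean difficulty, difficulty band, elimination flag) for one item.

    `ratings` holds the experts' scores on the 1 (easy) to 6 (difficult) scale.
    """
    m = round(mean(ratings), 2)  # two decimals, as in the reported boundaries
    if m <= 2.66:
        band = "low"
    elif m <= 4.33:
        band = "medium"
    else:
        band = "high"
    # Items judged too easy (1 to 1.83) or too difficult (5.17 to 6) are dropped.
    eliminate = m <= 1.83 or m >= 5.17
    return m, band, eliminate


# Hypothetical ratings from seven experts for three items.
print(classify_item([2, 3, 3, 4, 2, 3, 3]))  # medium difficulty, kept
print(classify_item([1, 1, 2, 2, 1, 2, 2]))  # too easy, eliminated
print(classify_item([6, 5, 6, 5, 5, 6, 5]))  # too difficult, eliminated
```

The sketch covers only the numerical criteria. In the procedure described here, the experts' comments and the balance between difficulty bands were also taken into account when selecting items.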

The means from 1 to 6 were then transformed into a scale from 0 to 1, which was used in subsequent calculations. The rescaled mean of each item was called the error probability index: the closer it is to 1, the more difficult it is to answer the item correctly. The probability index of correct answer was then obtained by subtracting the error probability index from 1 for each item. This indicator also ranges from 0 to 1 and gives the estimated probability that the item will be answered correctly: the closer it is to 1, the more likely a correct answer.
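The two indices can be illustrated with a short Python sketch. The exact transformation from the 1 to 6 scale to the 0 to 1 scale is not specified in the text, so a linear (min-max) rescaling is assumed here. The function names are hypothetical.

```python
def error_probability_index(mean_difficulty, low=1.0, high=6.0):
    """Rescale a mean difficulty from [low, high] to [0, 1].

    Assumption: a linear rescaling, so 1 maps to 0 and 6 maps to 1.
    """
    return (mean_difficulty - low) / (high - low)


def probability_index_of_correct_answer(mean_difficulty):
    """Estimated probability of a correct answer: 1 minus the error index."""
    return 1.0 - error_probability_index(mean_difficulty)


# An item rated 3.5 on average sits at the midpoint of both indices.
print(error_probability_index(3.5))
print(probability_index_of_correct_answer(3.5))
```

Under this assumption, an item with a mean difficulty of 2 would have an error probability index of 0.2 and a probability index of correct answer of 0.8.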

4.1.2. Participants

In the Bachelor’s degree in Translation and Interpreting at UAB, there are eight courses related to German as a second foreign language. The questionnaire was sent to the teachers responsible for each of the eight courses so that the level of difficulty could be monitored throughout the training: each teacher rated the difficulty that the items might pose for the students in their own course. One teacher was responsible for two courses and answered the questionnaire with the more advanced of the two in mind. Data were obtained from the following participants (Table 2).

Table 2

Sample used to measure content validity of the declarative knowledge questionnaire on German culture

As data were collected from the four years of the degree, it was possible to obtain an overview of the evolution of the level of difficulty from year to year.

4.1.3. Results

The probability index of correct answer was used to observe the distribution of the items’ estimated success rate (Figure 2).

Figure 2

Histogram of the probability index of correct answer

The distribution of the index showed a bimodal trend, with one peak between 0.10 and 0.40 (items with a medium-low probability of being correct) and another between 0.50 and 0.70 (items with a medium-high probability of being correct). Few items had a probability between 0.40 and 0.50 (items with a medium probability of being correct). The distribution did not reach either end of the range (0 or 1), indicating that there were no extreme values. As a result of this analysis, the following decisions were made: 1) the number of items with a low or high probability of being correct should be reduced; 2) the number of items with a medium probability of being correct should be increased; and, as a consequence of the previous two decisions, 3) the probability index of correct answer should approximate a normal distribution, even if a rather platykurtic one.

Based on this analysis, we also identified which items should be deleted and which should be modified: six were deleted and five were modified. Deleted items were replaced by new ones, which underwent the same procedure to estimate their probability of being correct. The cultural field of linguistic and communication needs was eliminated because it was already addressed in the translation of the text. It was replaced by the field of knowledge of behaviour models, values and ideas, which had not been included initially. At the end of the first validation phase, the test had a total of 35 items.

4.2. Phase two: criterion validity

Rossi, Wright, et al. (1983: 97) define criterion validity as “the correlation between a measure and some criterion variable of interest.” Criterion validity assesses whether the measurement of the questionnaire reflects the true level of knowledge of the subjects. To assess criterion validity, the questionnaire was tested in the pilot study of the quasi-experiment on the acquisition of translator cultural competence (Olalla-Soler 2017b).

The aim of the pilot study was not only to test the declarative knowledge questionnaire on German culture, but also to check all other instruments, the sample selection criteria, the methodological design of the study and the experimental tasks. The objectives of this validation phase were: 1) to determine whether the questionnaire collected the data for which it was designed, and 2) to determine whether the relationship between the level of difficulty of the questionnaire and the number of correct responses from subjects was consistent.

4.2.1. Procedure

We calculated the subjects’ score obtained in the declarative knowledge questionnaire on German culture by assigning a value of 1 for each correct answer and a value of 0 for each incorrect answer. The mean for each cultural field was then calculated and the mean for the four fields was computed.
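The scoring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the six-item answer key, the item-to-field mapping and the subject's answers are invented, and the "mean for the four fields" is read here as the mean of the four field means.

```python
from statistics import mean

# Hypothetical item-to-field mapping (toy six-item example, not the real questionnaire).
FIELDS = {
    "natural environment": [1, 2],
    "cultural patrimony": [3],
    "society": [4, 5],
    "behaviour models, values and ideas": [6],
}

def score_subject(answers, key):
    """Return (mean per cultural field, mean of the field means) for one subject."""
    # 1 point for each correct answer, 0 for each incorrect or missing answer.
    points = {item: int(answers.get(item) == correct) for item, correct in key.items()}
    field_means = {
        field: mean(points[item] for item in items) for field, items in FIELDS.items()
    }
    # Assumption: the overall score is the mean of the four field means.
    return field_means, mean(field_means.values())

# Invented answer key and invented answers for one subject.
key = {1: "a", 2: "c", 3: "a", 4: "a", 5: "d", 6: "c"}
answers = {1: "a", 2: "b", 3: "a", 4: "a", 5: "d", 6: "b"}
field_means, overall = score_subject(answers, key)
print(field_means, overall)
```

Averaging the field means rather than all items at once gives each cultural field the same weight regardless of how many items it contains; whether this matches the exact computation used in the study is an assumption.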

To assess the internal consistency of the questionnaire, the mean of correct answers per item was calculated. The mean values obtained for each item were called the indexes of correct answer, which range from 0 to 1. The closer to one, the more often the item was answered correctly. This indicator represents the observed value of correct responses for each item (Table 3).

Table 3

Calculation example of the index of correct answer of the subjects
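As a minimal sketch of how the index of correct answer can be obtained, the following Python snippet averages the 0/1 scores of each item across subjects. The response matrix is invented for illustration and does not reproduce the data in Table 3.

```python
from statistics import mean

# Rows are subjects, columns are items; 1 = correct, 0 = incorrect (invented data).
responses = [
    [1, 0, 1, 1],
    [1, 0, 0, 1],
    [0, 0, 1, 1],
    [1, 1, 0, 1],
    [1, 0, 0, 1],
]

def index_of_correct_answer(matrix):
    """Proportion of subjects who answered each item correctly (0 to 1)."""
    # zip(*matrix) transposes the matrix so that each tuple holds one item's scores.
    return [mean(column) for column in zip(*matrix)]

indexes = index_of_correct_answer(responses)
print(indexes)
```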

The next step was to subtract the probability index of correct answer from the index of correct answer. The value obtained was the consistency coefficient for each item. This value ranges from -1 (negative consistency: the probability of responding correctly is high, but a low number of correct answers is obtained) to 1 (positive consistency: the probability of responding correctly is low, but a high number of correct answers is obtained). The closer the value is to zero, the greater the consistency between the probability of responding correctly and the number of correct answers (Table 4).

Table 4

Calculation example of the consistency coefficient for each item
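The subtraction described above can be illustrated as follows. The item names and values are hypothetical and are not taken from Table 4; rounding to two decimals is a presentational assumption.

```python
def consistency_coefficient(index_observed, index_expected):
    """Index of correct answer minus probability index of correct answer."""
    # Rounded to two decimals for display only.
    return round(index_observed - index_expected, 2)

# Invented values per item: (index of correct answer, probability index of correct answer).
items = {
    "item A": (0.80, 0.60),  # answered correctly more often than expected
    "item B": (0.20, 0.50),  # answered correctly less often than expected
    "item C": (0.40, 0.40),  # observed and expected values coincide
}

coefficients = {
    name: consistency_coefficient(observed, expected)
    for name, (observed, expected) in items.items()
}
print(coefficients)
```

A positive value means that the item turned out to be easier than the experts estimated, a negative value that it turned out to be harder, and a value of zero that the estimate and the observation coincide.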

The mean of the consistency coefficient for all items was calculated for each of the subjects to obtain their overall coefficient.

Subsequently, we checked whether the index of correct answer and the probability index of correct answer were normally distributed using the Shapiro-Wilk test and Q-Q graphs. With both being normally distributed, the strength of association between the two indicators was measured with Pearson’s correlation.
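For readers who wish to reproduce the association measure, Pearson's correlation can be written out directly from its definition. The sketch below uses only the Python standard library and invented index values; it does not cover the Shapiro-Wilk test, which would require a statistics package, and it is not the software procedure used in the study.

```python
from math import sqrt
from statistics import mean

def pearson_r(x, y):
    """Pearson's correlation coefficient between two equally long sequences."""
    mean_x, mean_y = mean(x), mean(y)
    # Sum of cross-products of the deviations from each mean.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Square roots of the sums of squared deviations.
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cross / (spread_x * spread_y)

# Invented values for five items.
probability_index = [0.2, 0.3, 0.5, 0.6, 0.7]
index_correct = [0.1, 0.4, 0.4, 0.7, 0.6]
r = pearson_r(probability_index, index_correct)
print(round(r, 3))
```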

4.2.2. Participants

To assess criterion validity, two samples were drawn from two population groups. The first sample consisted of five students from the course Language and Translation C6 (fourth year, second semester). These students participated in the pilot study of the quasi-experiment. The second sample was made up of twelve students from the subject Basic Cultural Mediation in Translation and Interpreting from German as a first foreign language (third year, first semester). These students only completed the declarative knowledge questionnaire on German culture.

Although the two samples were drawn from different courses, the language levels were similar.[7] This enabled us to isolate language level as an extraneous variable and we thus attributed the possible differences between the two samples to different levels of cultural knowledge.

All the indexes presented in section 4.2.1 (except for the probability index of correct answer, which is derived from the teachers’ assessments) were calculated for the two sample groups separately.

4.2.3. Results

We first present the results for the index of correct answer for both sample groups. We then provide the results for the consistency coefficient between the probability index of correct answer and the index of correct answer.

4.2.3.1. Index of correct answer

Both groups obtained similar means regarding the index of correct answer (Table 5). The standard deviation was also similar. The Student’s t-test for equal variances[8] revealed that there was no statistically significant difference between the two samples: t(63) = 0.45; p = 0.65; 95% CI = -0.12, 0.19; Hedges’ g = 0.10.

Table 5

Mean and standard deviation of the index of correct answer by sample group
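The comparison reported above combines a pooled-variance Student's t statistic with Hedges' g as the effect size. A minimal sketch of both computations is given below, using invented values and the usual approximate small-sample correction for g; the p value and the confidence interval are omitted because they require the t distribution, which the Python standard library does not provide.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t_and_hedges_g(a, b):
    """Return (t statistic, degrees of freedom, Hedges' g) for two independent samples."""
    n_a, n_b = len(a), len(b)
    df = n_a + n_b - 2
    # Pooled variance under the equal-variances assumption.
    pooled_var = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / df
    diff = mean(a) - mean(b)
    t = diff / sqrt(pooled_var * (1 / n_a + 1 / n_b))
    # Cohen's d with the approximate small-sample correction gives Hedges' g.
    g = (diff / sqrt(pooled_var)) * (1 - 3 / (4 * df - 1))
    return t, df, g

# Invented index values for two groups of items.
group_a = [0.2, 0.4, 0.5, 0.6, 0.8]
group_b = [0.1, 0.3, 0.5, 0.7, 0.6]
t, df, g = pooled_t_and_hedges_g(group_a, group_b)
print(round(t, 2), df, round(g, 2))
```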

Nevertheless, when comparing the distribution of the index of correct answer for each item in both groups, some differences were observed (Figure 3).

The distribution of the index of correct answer in the case of students of German as a second foreign language was slightly closer to a normal distribution, although the curve was leptokurtic and slightly asymmetrical. The distribution of this index in the case of students of German as a first foreign language fluctuated more and the values did not lie within any specific range. This indicated that, whereas in the case of students of German as a second foreign language there was a trend towards a medium index of correct answer (the mode of the index of correct answer of the items was neither too low nor too high), in the case of students of German as a first foreign language there was a decreasing tendency: there were many items with a low index of correct answer, and the number of items decreased as the index of correct answer increased. Thus, the difference in the distributions suggested that the questionnaire was, to some extent, adjusted to the group of students of German as a second foreign language. This does not necessarily imply that the German as a first foreign language group performed worse than the German as a second foreign language group due to a lack of cultural knowledge (the mean difference between the two groups is 0.04 and it is not statistically significant). Rather, this result indicates that the items on the questionnaire were selected according to the difficulty perceived by the teachers of German as a second foreign language, and that their perception does not correspond to the index of correct answer for the group of students of German as a first foreign language.

If teachers of German as a first foreign language had assessed the difficulty of each item according to how difficult they expected it to be for their own students, the consistency between the probability index of correct answer and the index of correct answer would possibly be higher in the case of students of German as a first foreign language than in the case of students of German as a second foreign language. The relationship between these two indicators is explored in the following section.

Figure 3

Distribution of the index of correct answer by sample group

4.2.3.2. Consistency coefficient between the probability index of correct answer and the index of correct answer

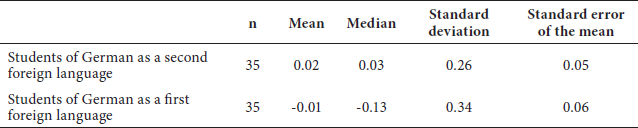

Table 6 shows the results of the consistency coefficient obtained by each sample group.

Table 6

Descriptive statistics of the consistency coefficient between the probability index of correct answer and the index of correct answer for each sample group

Taking the mean as the main statistic of central tendency, we observed no considerable difference between the consistency coefficient of the students of German as a second foreign language and that of the students of German as a first foreign language (a difference of 0.03 points). The Student’s t-test for equal variances[9] also indicated that there was no statistically significant difference between the two groups: t(63) = 0.44; p = 0.66; 95% CI = -0.12, 0.19; Hedges’ g = 0.09. However, the mean was not an adequate measure of the central tendency of this indicator: since the values were distributed on a scale of -1 to 1, positive and negative values offset one another, so the mean tended towards 0 and neutralised the distances to both range limits. It was therefore necessary to employ a more robust statistic of central tendency, such as the median. Bearing this in mind, we did observe some differences. While the median of the group of students of German as a second foreign language was close to the mean (0.03), the median of the students of German as a first foreign language differed considerably (-0.13). The median of the consistency coefficient of the group of students of German as a first foreign language was thus 0.16 points below that of the students of German as a second foreign language, indicating a deviation from absolute consistency (0.00). By contrast, the coefficient of the students of German as a second foreign language was very close to 0 (0.03 points).
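The argument about the mean of a signed coefficient can be made concrete with a toy example. The values below are invented: most items deviate negatively, but two large positive deviations pull the mean back to zero, whereas the median still reflects the typical negative deviation.

```python
from statistics import mean, median

# Invented consistency coefficients for seven items on the -1 to 1 scale.
coefficients = [-0.5, -0.4, -0.3, -0.2, -0.1, 0.6, 0.9]

mean_value = mean(coefficients)      # positive and negative deviations cancel out
median_value = median(coefficients)  # middle value of the sorted coefficients

print(round(mean_value, 2), median_value)
```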

To further analyse the deviation of the group of students of German as a first foreign language, we used Pearson’s correlation to observe the strength of the association between the index of correct answer and the probability index of correct answer for both groups (Table 7).[10]

Table 7

Pearson’s correlation between the index of correct answer and the probability index of correct answer for each sample group

In the case of the students of German as a second foreign language, the correlation was moderate (0.545) and the two indexes were found to be significantly correlated (p = 0.002). In comparison, in the case of the students of German as a first foreign language, Pearson’s correlation indicated that the two indexes were not correlated (-0.007) (Figure 4).

The rate of correct answers in the group of students of German as a second foreign language (that is, the values observed from the subjects) is correlated with the probability estimation of correct answers (that is, the probability of being correct according to the experts’ assessment). The questionnaire was thus adjusted to the students of German as a second foreign language, as their correct response rate was largely in line with the experts’ expectations, but not to the students of German as a first foreign language: while this group performed similarly on the questionnaire, their correct response rate differed from the experts’ expectations for the students of German as a second foreign language.

Figure 4

Scatter plot with regression lines for the index of correct answer and the probability index of correct answer by sample group

4.3. Phase three: face validity

Babbie (1990: 113-134) describes face validity as follows: “[p]articular empirical measures may or may not jibe with our common agreements and our individual mental images associated with a particular concept.”

The aim of this phase was to verify that all items were part of German citizens’ common knowledge of their own culture. Through this procedure, we identified several items that required modification because they were imprecisely worded, and we removed other items that were not part of the common knowledge of German citizens.

4.3.1. Procedure and participants

The questionnaire was translated into German and handed out to six German participants: three of them were about to take the university entry exam, two were university students and one of them was a middle-aged participant who had completed a university education. The participants filled in the questionnaire and could add comments where necessary.

We processed the data obtained as follows. First, the correct responses were counted in each group (pre-university students, university students, and the middle-aged participant). Second, the responses were grouped into three categories: 1) when all participants responded correctly to an item, the item was considered appropriate and pertinent; 2) when none of the participants responded correctly to an item, the item was considered neither appropriate nor pertinent; and 3) when only some of the participants responded correctly to an item or when they added negative comments, it was considered that the item needed to be revised. Particular attention was devoted to the number of correct responses in the older age groups.
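The three-way decision rule above can be expressed as a small function. This is a sketch under one labelled assumption: the text does not state how an item that nobody answered correctly but that also received a negative comment was treated, so the function below gives precedence to removal in that case.

```python
def classify_item(correct_flags, negative_comment=False):
    """Classify one item from the participants' responses.

    correct_flags: one boolean per participant (True = answered correctly).
    negative_comment: True if any participant added a negative comment.
    """
    if not any(correct_flags):
        # Nobody answered correctly: neither appropriate nor pertinent.
        # Assumption: this outcome takes precedence over negative comments.
        return "remove"
    if all(correct_flags) and not negative_comment:
        # Everybody answered correctly and nobody objected.
        return "appropriate"
    # Only some participants answered correctly, or a negative comment was added.
    return "revise"

# Hypothetical responses from six participants to three items.
print(classify_item([True] * 6))
print(classify_item([True, False, True, True, False, True]))
print(classify_item([False] * 6))
```

The additional weight given to the older participants' responses is not modelled here, since the text does not specify how it was applied.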

4.3.2. Results

Table 8 contains an example of the analysis for three items. One of them was not modified, one was edited and the third was removed.

Table 8

Example of face validity analysis for three items

The items that needed to be revised were identified, those that were deemed inappropriate were eliminated and some new items were drafted. The final version of the questionnaire contained 30 items.

5. Conclusions and final remarks

In this paper, we have presented the design and validation process of a declarative knowledge questionnaire on German culture that may be useful for both educational and research purposes. We have presented the documentation sources that were employed in its elaboration, as well as the research context from which the need to design this instrument arises. We have also provided a detailed description of the validation process to verify its face, criterion, and content validity. After the validation process, the final version of the questionnaire consists of 30 items (Appendix) distributed as follows:

Organisation of the natural environment: 4 items;

Organisation of the cultural patrimony: 7 items;

Organisation of the society: 12 items;

Knowledge of behaviour models, values and ideas: 7 items.

We have presented a validated instrument that researchers or educators may use or adapt to their needs. We have also offered a design and validation process that might be useful for other researchers who may want to design a questionnaire with similar characteristics.

To conclude, we would like to mention some of the positive aspects and limitations associated with the use and design of these instruments for research purposes. We highlight the following positive aspects:

Our instrument has been subject to a meticulous validation process and one of the most remarkable results of this is that we managed to calibrate the instrument to the group for which it was intended.

We have confirmed that the instrument collects relevant data, since the results obtained from the subjects are consistent with what was expected of them.

As the questionnaire has been calibrated and relevant data have been collected, we can conclude that it meets the specific needs of our study.

As regards limitations, we would like to mention that this instrument does not allow for automatic replication of the study with other samples as it must be calibrated for each sample. In addition, the questionnaire is not stable over long periods of time, since what any German citizen may consider common knowledge today may vary over time. Finally, given the research purposes for which this questionnaire was designed, the items that it contains are intended to measure cultural knowledge at a nation-state level. However, this instrument may be adapted to other cultural levels which are also necessary to acquire a translator’s cultural competence. As Koskinen (2015) noted, if in translator training the nation-state is the only cultural level addressed in the classroom, this may lead to the development of stereotypes and a dualistic view of the world.

Lastly, we would like to stress the need to triangulate the data obtained with this instrument with those obtained with other instruments to provide a more comprehensive representation of the cultural knowledge of students (in the case of training) or participants (in the case of research) who complete this questionnaire, since cultural knowledge can be very complex and a single questionnaire is not sufficient to measure it.

Appendices

Appendix

Cultural declarative knowledge questionnaire (English version)

The purpose of this questionnaire is to describe the participants’ knowledge of German culture.

Searching for information on the internet is not allowed.

Mistakes will not be penalised, so please do not leave any questions unanswered.

1. What inscription can be read on the facade of the Bundestag?

a) Dem Deutschen Volke

b) Der Deutschen Einheit

c) Dem Deutschen Lande

d) Den Deutschen Helden

2. What was the Stasi?

a) The secret police service of the Third Reich

b) A famous German monument

c) The secret service of the Democratic Republic of Germany

d) A German sports club

3. On September 1, 1939 the Second World War began with the occupation of Poland by the Wehrmacht. The Wehrmacht capitulated…

a) On May 8, 1945

b) On August 6, 1945

c) On August 18, 1945

d) On September 2, 1945

4. Which of the following aspects do Germans appreciate the most?

a) Organisation and planning

b) Spontaneity and improvisation

c) Distrust and prudence

5. In the Federal Republic of Germany, married couples are allowed to divorce, although the couple is required to complete a separation year (Trennungsjahr). What does this entail?

a) The separation process takes one year.

b) The spouses must be married for a minimum of one year.

c) The right of access to the children lasts one year.

d) The spouses must live separately for at least one year.

6. In general, in conversations between Germans, opinions and arguments…

a) are expressed indirectly to be inferred by the interlocutor.

b) are expressed using detours to avoid direct confrontation.

c) are expressed directly to facilitate understanding of the message.

d) are avoided so as not to disturb the other participants.

7. What is the average distance between two Germans in a conversation?

a) Approximately two arms’ length

b) Approximately one arm’s length

c) Approximately one hand’s width

d) Approximately half an arm’s length

8. What year is the current German Constitution from?

a) From 1929

b) From 1949

c) From 1969

d) From 1989

9. The President of the Federal Republic of Germany is not directly elected by the people. Who elects him/her?

a) The Federal Government of the Federal Republic of Germany

b) The Federal Council of the Federal Republic of Germany

c) The Federal Assembly of the Federal Republic of Germany

d) The Federal Parliament of the Federal Republic of Germany

10. In what situation is it socially acceptable to address someone as “du”?

a) When a teacher addresses a pupil in primary school

b) When addressing a lower-ranking worker

c) When addressing a waiter in a bar

d) When addressing an official body

11. What is the current governing coalition in Germany?

a) CDU + FDP

b) CSU + SPD

c) CDU/CSU + SPD

d) CDU/CSU + FDP

12. On what day did the reunification of the Federal Republic of Germany and the Democratic Republic of Germany take place?

a) On October 7, 1989

b) On November 9, 1989

c) On October 3, 1990

d) On December 2, 1990

13. Which of the following options contains only Länder that belonged to the Democratic Republic of Germany?

a) North Rhine-Westphalia, Hesse, Mecklenburg-Vorpommern

b) Mecklenburg-Vorpommern, Saxony-Anhalt, Thuringia

c) Bavaria, Baden-Württemberg, Rhineland-Palatinate

d) Saxony, Hesse, Lower Saxony

14. What is the political orientation of the Nationaldemokratische Partei Deutschlands (NPD) political party?

a) Far-left

b) Left

c) Right

d) Far-right

15. Who founded the Bauhaus art school in 1919?

a) Paul Klee

b) Ludwig Mies van der Rohe

c) László Moholy-Nagy

d) Walter Gropius

16. Next to which river can the Lorelei Rock be found?

a) The Danube

b) The Rhine

c) The Elbe

d) The Spree

17. At what age is the Grundschule usually completed?

a) Between seven and a half and eight and a half

b) Between eight and a half and nine and a half

c) Between nine and a half and ten and a half

d) Between eleven and a half and twelve and a half

18. What treaty did the Federal Republic of Germany and other states sign for the creation of the European Economic Community?

a) The Treaty of Hamburg

b) The Treaty of Rome

c) The Treaty of Paris

d) The Treaty of London

19. Which is the highest peak in Germany?

a) Zugspitze

b) Hochvogel

c) Kahlersberg

d) Hoher Göll

20. The Hauptschule…

a) prepares students for university.

b) prepares students for secondary school.

c) prepares students for vocational training.

d) prepares students for professions related to health and business.

21. In general, in Germany, interpersonal relations in the workplace…

a) are marked by the hierarchy of the workplace.

b) are as close as friendly interpersonal relationships.

c) are based on informality.

d) are usually avoided to avoid interfering with work.

22. With which three countries does Germany share a border?

a) with Poland, Liechtenstein and Austria

b) with France, the Netherlands and Hungary

c) with the Czech Republic, Luxembourg and Belgium

d) with Slovakia, Switzerland and Denmark

23. Which of the following statements is false? In Germany, …

a) time-planning and punctuality are given great importance.

b) recycling and care for the environment are given great importance.

c) the reconciliation of work and family life is considered secondary to work.

d) social norms and role models are respected.

24. The Dirndl is typical of which Land?

a) Bavaria

b) Bremen

c) Saarland

d) Thuringia

25. What form of government is there in Germany?

a) Republic

b) Monarchy

c) Dictatorship

26. For which of the following packages is there no deposit (Pfand) required?

a) For water bottles

b) For wine bottles

c) For beer bottles

d) For soft drink cans

27. Who wrote Der Zauberberg (The Magic Mountain)?

a) E. T. A. Hoffmann

b) Friedrich Schiller

c) Erich Kästner

d) Thomas Mann

28. The elections to the Bundestag are…

a) elections to the Federal Chancellery.

b) elections to regional parliaments.

c) the German parliamentary elections.

d) elections to the Federal Presidency.

29. What change in Germany’s energy model is expected to be implemented by 2022?

a) To eliminate solar energy.

b) To abandon nuclear energy progressively.

c) To extract gas in the Arctic.

d) To introduce fracking in the Länder.

30. Usually, Germans…

a) tend to be flexible in the event of unforeseen events.

b) tend to avoid unforeseen events through planning.

c) tend to improvise what to do when unforeseen events arise.

d) never experience unforeseen events.

[Correct answers at the time of conducting the quasi-experiment: 1-a; 2-c; 3-a; 4-a; 5-d; 6-c; 7-b; 8-b; 9-c; 10-a; 11-c; 12-c; 13-b; 14-d; 15-d; 16-b; 17-c; 18-b; 19-a; 20-c; 21-a; 22-c; 23-c; 24-a; 25-a; 26-b; 27-d; 28-c; 29-b; 30-b

Classification of the items by cultural area:

Organisation of the natural environment: 13, 16, 19, 22

Organisation of the cultural patrimony: 1, 3, 9, 12, 15, 18, 27

Organisation of the society: 2, 5, 8, 11, 14, 17, 20, 24, 25, 26, 28, 29

Models of behaviour, values and ideas: 4, 6, 7, 10, 21, 23, 30]

Notes

[1] Bundesamt für Migration und Flüchtlinge (2012): Der Einbürgerungstest. Consulted 10 December 2017, <http://www.bamf.de/DE/Willkommen/Einbuergerung/WasEinbuergerungstest/waseinbuergerungstest-node.html>.

[2] EMT Expert Group (2009): Competences for professional translators, experts in multilingual and multimedia communication. Brussels: Directorate-General Translation, European Commission. Consulted on August 25, 2019, <https://ec.europa.eu/info/sites/info/files/emt_competences_translators_en.pdf>.

[3] Conférence internationale permanente d’instituts universitaires de traducteurs et interprètes (Last update: 12 September 2007): Our Profile. CIUTI. Consulted on December 10, 2017, <http://www.ciuti.org/about-us/profile/>.

[4] Promoting Intercultural Competence In Translators (2012): Intercultural competence: Curriculum framework. PICT. Consulted on December 10, 2017, <http://www.pictllp.eu/download/curriculum/PICT-CURRICULUM_ENGLISH.pdf>.

[5] Hess, Jürgen C. (2010): Duden Allgemeinbildung – Testen Sie Ihr Wissen! 1.000 Fragen und 4.000 Antworten [Duden General Education - Test your knowledge of Germany! 1,000 questions and 4,000 answers]. Mannheim: Duden.

[6] Schroll-Machl, Sylvia (2002): Die Deutschen - Wir Deutsche. Fremdwahrnehmung und Selbstsicht im Berufsleben [The Germans - We Germans. External perception and self-awareness in professional life]. Göttingen: Vandenhoeck & Ruprecht.

[7] From the academic year 2011/2012 onwards, the Faculty of Translation and Interpreting at the Universitat Autònoma de Barcelona uses the Dialang tests to assess the levels of both first and second foreign languages. In 2014 (the year in which the pilot study was conducted), the level of the students of German as a first foreign language was C1.1 and that of the students of German as a second foreign language was C1.1-2. Source: internal documentation from the Faculty of Translation and Interpreting - UAB.

[8] The equality of variances was verified with the Levene test.

[9] We checked whether the consistency coefficient was normally distributed using the Shapiro-Wilk test and Q-Q graphs. The equality of variances was tested with the Levene test.

[10] We checked whether the probability index of correct answer was normally distributed using the Shapiro-Wilk test and Q-Q graphs.

Bibliography

- Babbie, Earl (1990): Survey research methods. Belmont: Wadsworth Publishing Company.

- Bahumaid, Showqi (2010): Investigating cultural competence in English-Arabic translator training programs. Meta. 55(3):569-588.

- Bell, Roger T. (1991): Translation and Translating. London: Longman.

- Berenguer, Laura (1998): La adquisición de la competencia cultural en los estudios de traducción. Quaderns: Revista de traducció. 2:119-129.

- Cao, Deborah (1996): Towards a model of translation proficiency. Target. 8(2):325-340.

- Clouet, Richard (2008): Intercultural language learning: cultural mediation within the curriculum of translation and interpreting studies. Ibérica. (16):147-167.

- DeVellis, Robert F. (1991): Scale development. Theory and applications. Newbury Park: SAGE Publications.

- Gutiérrez Bregón, Silvia (2016): La competencia intercultural en la profesión del traductor: aproximación desde la formación de traductores y presentación de un estudio de caso. Trans. Revista de traductología. (20):57-74.

- Hansen, Gyde (1997): Success in translation. Perspectives. 5(2):201-210.

- Hurtado Albir, Amparo (1996): La enseñanza de la traducción directa ‘general.’ Objetivos de aprendizaje y metodología. In: Amparo Hurtado Albir, ed. La enseñanza de la traducción. Castellón de la Plana: Universidad Jaume I, 31-55.

- Hurtado Albir, Amparo and Olalla-Soler, Christian (2016): Procedures for assessing the acquisition of cultural competence in translator training. The Interpreter and Translator Trainer. 10(3):318-342.

- Katan, David (1999): Translating cultures. An introduction for translators, interpreters and mediators. Manchester: St. Jerome.

- Katan, David (2008): University training, competencies and the death of the translator. Problems in professionalizing translation and in the translation profession. In: Maria Teresa Musacchio and Geneviève Henrot, eds. Tradurre: formazione e professione [Translating: training and profession]. Padua: CLEUP, 113-140.

- Katan, David (2009): Translator training and intercultural competence. In: Stefania Cavagnoli and Elena Di Giovanni, eds. La ricerca nella comunicazione interlinguistica. Modelli teorici e metodologici [Research on interlinguistic communication. Theoretical and methodological models]. Milan: Franco Angeli, 282-301.

- Kelly, Dorothy (2002): Un modelo de competencia traductora: bases para el diseño curricular. Puentes. (1):9-20.

- Kelly, Dorothy (2005): A Handbook for translator trainers. Manchester: St. Jerome.

- Kelly, Dorothy and Soriano, Inmaculada (2007): La adquisición de la competencia cultural en los programas universitarios de formación de traductores: el papel de la movilidad estudiantil. Тетради Переводчика. (26):208-111.

- Kiraly, Donald C. (1995): Pathways to translation. Pedagogy and process. Kent: The Kent State University Press.

- Koskinen, Kaisa (2015): Training Translators for a Superdiverse World. Translator’s Intercultural Competence and Translation as Affective Work. Russian journal of linguistics. (4):175-184.

- Litwin, Mark S. (1995): How to measure survey reliability and validity. Thousand Oaks: SAGE Publications.

- Molina, Lucía (2001): Análisis descriptivo de la traducción de los culturemas árabe-español. Doctoral thesis, unpublished. Bellaterra: Universitat Autònoma de Barcelona.

- Muñiz, José, Fidalgo, Ángel M., García-Cueto, Eduardo, et al. (2005): Análisis de los ítems. Madrid: La Muralla.

- Neubert, Albrecht (2000): Competence in language, in languages, and in translation. In: Christina Schäffner and Beverly Adab, eds. Developing translation competence. Amsterdam/Philadelphia: John Benjamins, 3-18.

- Nord, Christiane (1988): Textanalyse und Übersetzen. Theoretische Grundlagen, Methode und Didaktische Anwendung einer übersetzungsrelevanten Textanalyse [Text analysis and translation. Theoretical foundations, methods, and didactic applications of translation-relevant text analysis]. Heidelberg: J. Gross Verlag.

- Olalla-Soler, Christian (2017a): La competencia cultural del traductor y su adquisición. Un estudio experimental en la traducción alemán-español. Doctoral dissertation, unpublished. Bellaterra: Universitat Autònoma de Barcelona.

- Olalla-Soler, Christian (2017b): Un estudio experimental sobre la adquisición de la competencia cultural en la formación de traductores. Resultados de un estudio piloto. Meta. 62(2):435-460.

- Olalla-Soler, Christian (2019): Applying internalised source-culture knowledge to solve cultural translation problems. A quasi-experimental study on the translator’s acquisition of cultural competence. Across Languages and Cultures. 20(2): 253-273.

- Olk, Harald M. (2003): Cultural knowledge in translation. ELT journal. 57(2):167-174.

- Olk, Harald M. (2009): Translation, cultural knowledge, and intercultural competence. Journal of intercultural communication. 20. Consulted 13 April 2019, http://www.immi.se/intercultural/.

- PACTE (2003): Building a translation competence model. In: Fabio Alves, ed. Triangulating translation: Perspectives in process oriented research. Amsterdam/Philadelphia: John Benjamins, 43-66.

- Rossi, Peter H., Wright, James D., and Anderson, Andy B., eds. (1983): Handbook of survey research. Orlando/London: Academic Press.

- Shreve, Gregory M. (2006): The deliberate practice: Translation and expertise. Journal of translation studies. 9(1):27-42.

- Witte, Heidrun (2000): Die Kulturkompetenz des Translators [The cultural competence of the translator]. Tübingen: Stauffenberg Verlag.

- Witte, Heidrun (2005): Traducir entre culturas. La competencia cultural como componente integrador del perfil experto del traductor. Sendebar. (16):27-58.

- Yarosh, Maria (2012): Translator intercultural competence: The concept and means to measure the competence development. Doctoral dissertation, unpublished. Deusto: University of Deusto.

- Yarosh, Maria and Muies, Larry (2011): Developing translator’s intercultural competence: a cognitive approach. Redit. (6):38-56.

