Abstracts

Abstract

Injuries are common over a performing musician’s career, and the wrists are the most frequent site of pain for pianists. Although general recommendations insist on keeping wrists in a “neutral” position to avoid injury, this is rarely done in practice. Recent advances in motion capture technology may help raise students’ awareness of their propensity to use wrist positions outside of the recommended “neutral.” These technologies may be used to measure precise wrist positions in piano playing in order to set specific thresholds for avoiding injury. This paper discusses various advantages and limitations of motion capture technologies, including data visualization and usage within the music instrument pedagogy framework, in order to define a set of requirements for an accessible motion-tracking system. A prototype of a dedicated image-processing-based system with a graphical user interface that meets these requirements is described. This system uses passive coloured markers and a standard 3D camera, encouraging use outside the traditional laboratory environment. Simple camera calibration options and basic hand tracking from aerial view images allow monitoring of wrist flexion/extension over short video recordings. Measurements are compared to flexion/extension thresholds recommended for typists to prevent carpal tunnel pressure, and moments of approaching or exceeding these thresholds are flagged to the user both in real time and post-performance. Potential applications include monitoring the practice of short technical passages without restriction of instrument or location.

Résumé

Les interprètes doivent gérer des blessures tout au long de leur carrière, et chez les pianistes, ce sont surtout les blessures aux poignets qui les font particulièrement souffrir. Alors que les recommandations générales insistent sur la position « neutre » du poignet pour éviter les blessures, elles sont rarement appliquées dans la réalité. De récentes avancées technologiques dans le domaine de la capture de mouvements pourraient aider les étudiants à être plus conscients de leur propension à dépasser les limites de la position « neutre » recommandée. Ces technologies pourraient être utilisées pour mesurer les positions précises du poignet durant le jeu pianistique afin de déterminer des ensembles de seuils spécifiques qui permettront d’éviter les blessures. Cet article présente les avantages et les inconvénients des technologies de la capture de mouvement, incluant la visualisation des données et leur utilisation dans le cadre de la pédagogie instrumentale, afin d’établir une liste de critères auxquels répondrait un système d’analyse des mouvements facile à utiliser. L’article présente un prototype de système de traitement des images spécialisé accompagné d’une interface graphique pour les utilisateurs qui répond à cette liste de critères. Le système recourt à des marqueurs de couleur passifs et à une caméra 3D standard afin d’encourager une utilisation en dehors des environnements de laboratoire traditionnels. Des options de calibrage simples pour la caméra, ainsi qu’une localisation de base des mains grâce à des images aériennes permettent d’observer les flexions et extensions des poignets dans de courts enregistrements vidéo. Les données sont comparées à des seuils de flexion/extension proposés pour les dactylos afin de prévenir les problèmes de tunnel carpien, et les utilisateurs sont avertis lorsqu’ils approchent ou dépassent ces seuils à la fois pendant et après l’enregistrement. 
Les applications potentielles du système incluent la surveillance durant la répétition de courts passages techniques, sans restriction d’instrument et de lieu.

Article body

Nearly 85 per cent of musicians may suffer from playing-related pain over their lifetimes (Silva, Lã, and Afreixo 2015), with a high percentage of professional musicians and conservatory students reporting performance-impairing pain or performance-related musculoskeletal disorders (PRMDs).[1] Although performance-related injuries are common, few data exist that examine and/or define the precise limits of “good” body posture and movement in order to minimize the risk of incurring injury (MacRitchie 2015). The recent increase of accessible and user-friendly forms of motion capture technologies may be key in aiding the study of these issues in the pianist population (Metcalf et al. 2014), but a number of issues and limitations remain regarding both the design (cost, portability, accuracy) of such technologies and their implementation (usefulness) within lessons. This paper describes an efficient wrist motion capture system that satisfies these requirements, particularly with regard to ease of operation and useful data visualizations. A prototype is proposed to measure specific parameters of wrist flexion/extension and rotation in piano performance.

In this paper we also discuss the following: 1) common pianists’ injuries and techniques for determining precise posture thresholds, 2) state-of-the-art performance monitoring systems and 3) the implications for teachers, pianists and researchers of using such technologies.

Piano Performance Injuries and Determining Posture Thresholds

For pianists, the wrists are the most common site of pain (Pak and Chesky 2001). Female players are likely to have more occurrences of pain than male players, and the frequency of occurrence of pain (across the range of mild to severe pain) decreases with age. Conversely, older pianists report more frequent occurrences of severe pain. Pak and Chesky suggest that this may reflect a pattern whereby mild pain occurrences in early life eventually lead to severe pain in later life. As pianists who practice the most regularly and the longest are likely to develop PRMDs, Allsop and Ackland (2010) propose that a combination of overuse and misuse is the main cause of injury. The frequency of these injuries in pianists suggests that more information is required regarding the limitations of wrist angles for continued healthy playing. To acquire this information on a large scale, researchers must be able to monitor performances easily, outside of laboratory environments. This would not only provide more data on the range of wrist angles across various individuals and various technical tasks in piano playing, but would also help raise awareness among piano students with regard to the strain applied to their wrists in daily practice. As students will likely approach their instrumental teacher first with questions about health problems (Williamon and Thompson 2004), motion capture technology can be used in a teaching environment to monitor wrist postures that may lead to injury, and to stimulate discussion between teacher and student about wrist angles.

Wrist injuries can occur in any situation in which there are many repetitive movements that cause the wrists to be in a state of extension/flexion or of ulnar or radial deviation.[2] Conditions such as carpal tunnel syndrome can occur from increased pressure due to wrist extension and flexion (Rempel, Keir, and Bach 2008), which occurs in fine motor activities such as typing or piano playing (Sakai 1992). Keir et al. (2007) measured the relationship between wrist angle and carpal tunnel pressure in typing. Their work resulted in a set of specific wrist posture guidelines: the 25th percentile angle measurements for wrist extension (26.6°) and flexion (37.7°), and radial (17.8°) and ulnar deviation (12.1°), should protect 75 per cent of the population from developing carpal tunnel pressure of 25 mmHg. In other words, out of 100 typists, 75 would not develop significant carpal tunnel pressure if their movements were kept within the above angle thresholds. Further study by these authors demonstrated that varying angles of wrist posture either in extension (30°) or radial deviation (15°) were also related to increased carpal tunnel fluid pressure for typists averaging over 20 hours per week of keyboard usage (Rempel et al. 2008). It is unknown whether these thresholds would apply directly to piano playing. Piano playing frequently requires fine motor movements that are rare or nonexistent in typing tasks, e.g. pressing multiple keys at once (as in consecutive thirds, see Lee 2010), or using different forces across keypresses (to achieve different loudness levels, see Furuya et al. 2012; Kinoshita et al. 2007). Piano playing technique is also informed by different “schools” of playing which may recommend focus on one or many parts of the arm (Wheatley-Brown 2011).
Until the wrist angle thresholds that lead to injury have been defined, a system providing feedback on these movements to pianists and teachers can either use arbitrary categorisations or use the thresholds for typists stated in Keir et al. (2007). The prototype described in this paper takes the latter approach.

Terminology concerning the physical nature of piano playing is often confusing and definitions are vague in the pedagogy literature (Wheatley-Brown 2011; Wristen 2000). Although piano pedagogues demonstrate a growing awareness of the implications of increasing tension through bad movement habits, three recent publications (Berman 2000; Fraser 2011; Mark 2003) demonstrate that pianists still receive only general guidelines on the use of the wrist. Fraser (2011) acknowledges that wrist height is important in minimising tension, noting that the ‘low’ wrist (extension) is undesirable and blocks the force travelling between arm and fingers. Mark (2003) describes the wrist as “long and flexible” rather than a hinge, and emphasizes “neutral” wrist positions that are not “stiff.” The wrist is most often mentioned in terms of the benefits of vertical/lateral movement and rotation, which free the fingers to play more complicated technical passages (Berman 2000; Fraser 2011).

Although neutral wrist positions are recommended to reduce the rate of PRMDs (Oikawa et al. 2011), pianists actually stay in the neutral wrist position during only a small percentage of playing time (between 0 and 7.5 per cent for the left hand and between 0.4 and 13.2 per cent for the right hand) (Savvidou et al. 2015).[3] This suggests that current pedagogical advice does not provide adequate tools for the student pianist to develop a healthy technique. Pianists with a smaller hand span are also likely to use more wrist flexion/extension when playing chords than those with a larger hand span (Lai et al. 2015) unless they have the opportunity to play on a smaller sized keyboard (Wristen et al. 2006). As pointed out by Allsop and Ackland (2010), these classifications regarding hand span or hand size are often arbitrary.

The commonality of PRMDs, the arbitrary classifications of wrist angle positions, and the scarce evidence to date concerning potential contributing factors such as hand size give reason to seek techniques and technologies that will support evidence-based pedagogies.

Designing an Accessible Motion Capture System for Music Instrument Education

In evaluating the suitability of current technologies for an accessible motion capture system, the following elements are considered to determine a set of system requirements: 1) the cost, portability, efficiency and operation of motion capture technologies used to measure wrist position/angle, 2) the immediacy and usefulness of data visualization in giving feedback to the performer, and 3) how the technology can fit into pedagogical frameworks. This third element is crucial to the implementation of these systems in lessons and rehearsals.

Technologies to Measure Wrist Joint Angles

A variety of instruments can be used to measure either the joint angle at the wrist, or the strain put on the wrist extensor or flexor muscles during performance. However, few studies to date have measured wrist angles in piano playing. Lee focused on static measurements of pianists’ hand anthropometry (Lee 2010) and their relationship to performance features measured through MIDI. Other studies are based on motion capture, electro-goniometers or electromyography which are used to make real-time measurements of joint angles during performances of technical exercises or repertoire (Chung et al. 1992; Lai et al. 2015; Oikawa et al. 2011; Sakai et al. 2006; Savvidou et al. 2015; Wristen et al. 2006). Laboratory-based studies measuring wrist posture generally use goniometers (see studies measuring wrist and finger angles in typing, such as Treaster and Marras 2000) although studies based in the performance arts report using a range of multi-camera motion capture systems, data-gloves and accelerometers to measure wrist and finger angles (see Metcalf et al. 2014 for a review of technologies used to study finger/hand motion in the performance arts field). These solutions, particularly in the case of the multi-camera motion capture systems, are costly and not very portable. The technology can also be difficult to master for novice users. This may hamper their integration into music lessons.

Outside the laboratory, low-cost sensors have been developed to monitor hand postures “on the move” (Kim et al. 2012). 3D cameras (such as the Microsoft Kinect, or the Intel RealSense 3D camera), which often combine an RGB camera and a depth camera, have increased in accuracy and decreased in cost. As a result, researchers have developed applications that perform fast and accurate tracking of hand postures (e.g. Sharp et al. 2015). 3D cameras have certain limitations, including line-of-sight and difficulty of depth detection in complex backgrounds. For instance, if a single camera is used, an object obscured from the camera view cannot be detected (e.g. hands crossed over each other). The use of multiple depth cameras may resolve this issue, though the processing power required and the need to combine multiple camera images may limit an application’s use in contexts outside the laboratory.

A 3D camera detects objects best when the object in question is in front of a large, flat, stationary surface. The system described here is intended for activities with complex backgrounds (piano keys), in which multiple keys will be in motion during the activity. Because the proprietary object detection algorithms of most 3D cameras rely on edge detection involving depth values, such backgrounds can produce false object detection, in which the edge of a key is misidentified as part of the body. In addition, as infrared reflection of surfaces is used to estimate depth, poorly reflective surfaces (such as black keys on the piano) will measure a depth of -1 in the z-axis and may compromise the detection of other objects nearby (such as the fingers). To mitigate this limitation, passive coloured markers can aid object detection in these environments. However, using coloured markers to increase accuracy requires that the user a) has coloured markers readily available and b) applies the markers correctly. In order to maintain an easy-to-use system, the number of markers should be limited.

Data Visualization

Once movement data is captured, it must be visualized simply for quick feedback to the performer. Motion capture systems recently used in music education contexts consider the timing when feedback is provided (online or offline) and the specificity of the data being visualized (raw visualizations vs. visualizations that compare performance to a template or threshold).

Savvidou et al. (2015) devised a system for pianists that uses the Kinect camera to track wrist postures and provides offline results—measured in relation to arbitrary thresholds for posture—to students. Motion capture systems for use in violin performance have utilized online (real-time) feedback of results through Hodgson plots that show the trajectory of the bow in relation to the bridge and the strings (Schoonderwaldt and Wanderley 2007). Ng (2008) used the concept of a “3D augmented mirror” to provide feedback to the performers so that both the performer and the performing environment could be visualized. Both systems (Schoonderwaldt and Wanderley 2007; Ng 2008) traced the trajectory of bow movements in real time so the performer could keep track of their motion patterns during performance. In the case of Ng (2008), feedback could be provided when a performance feature exceeded a certain threshold. For example, the user could program the 3D augmented mirror system to make a sound when the bow was not parallel to the bridge (Weyde et al. 2007). Although potentially useful, a user-defined threshold may only be beneficial if a teacher or student knows exactly which parameters to select and where to set the threshold. In the case of wrist postures, teachers and students do not have enough information to enable this type of user selection.

Pedagogical Frameworks for Using Motion Capture Technology

One crucial point to consider is how to integrate the use of technology into music lessons in such an effective way that it is useful over multiple occasions. Integration of motion capture into human performance tasks is not novel: these types of systems are used often to perfect golf swings or analyze running gaits. Then again, limitations in the cost and accessibility of some motion capture systems may require that music lessons be scheduled in a different location. For a system designed for use in everyday lessons, quick setup and teardown is essential, as is ease of use by the operator (teacher or student). A system designed to follow gesture via wireless sensors was used in a music theory class in order to compare student conductors’ gestures with pre-recorded reference gestures made by the teacher (Bevilacqua et al. 2007). According to the authors, “a single person was able to install and operate the system seamlessly” and students commented positively on the potential creative use of such a tool. Although no details were given regarding how useful the students found the application’s visualization of this gesture data (perhaps because the sonification of these gestures was sufficient to provide the feedback required), the authors achieved their aim of minimal disturbance in a normal music theory class.

In Gillies et al. (2014), one of the few user evaluation studies to examine the utility of technology in music instrument education specifically, teachers used a full body motion capture system based on the Kinect camera. This gave feedback to students on their posture and movement via a visualization of the performer’s skeleton. Teacher and student pairs for viola, percussion and conducting were examined. The teachers reported that the reduction of data required to create the visualization removed contextual cues that could be important to the performance. As a result, the teachers concluded that the system was only useful for monitoring gross postural problems. Based on this feedback, the authors redesigned the visualization so that it incorporated the original video images and opened up the functionality of the monitoring tools so that users could perform their own manual correction of the recorded data. The teachers reported that this version of the system provided a richer view of the musical gestures, and the ability to perform manual correction on the data encouraged them to better trust the technology. The authors concluded that there could be advantages to using video and motion capture in tandem rather than in isolation. These studies provide guidelines for system design: systems should be easy to install and to use, and contextual information should not be removed entirely in data visualization.

It is still necessary to evaluate the usefulness of systems in order to determine how they might fit into a specific pedagogy practice. In this article, we propose a prototype system based on a set of user requirements identified in the literature. Nevertheless, the usefulness of this system will only be determined subsequent to further user evaluation (currently outside the scope of this paper). The i-Maestro project details user involvement via a list of user specifications for the system (Weyde et al. 2007) and plans to validate the final system through European tertiary institutions; however, few user studies with these types of systems are reported in detail in the literature. Current work is being conducted through the TELMI European project (Technology Enhanced Learning of Music Instrument Performance[4]) to design and evaluate interaction paradigms with multimodal technology.

Designing a Prototype

With the aim of designing an accessible motion-tracking system for pianists and their teachers to use to monitor wrist angle movement, we have identified the three following requirements.

1) Technology and Operation

The system must be cost-effective, easily portable, and easily operated by the teacher/student. The Intel RealSense 3D camera is chosen as an example of a commercially available hardware tool that is easily portable. A user interface will be designed to accommodate simple recording and visualization.

2) Data Visualization

The system must make use of both online and offline visualizations to allow for both real-time and post-performance monitoring. Given that specific wrist angle thresholds are not yet standardized for piano playing, both visualizations will compare the measured wrist angle with the thresholds defined by Keir et al. (2007). These can be adjusted when more information is collected regarding wrist angle occurrences and their relationship to injury occurrence and severity in piano playing.

3) Pedagogical Framework

The system is required to be time-efficient, so that it does not dominate an entire lesson, but can be incorporated quickly as needed. Setup and teardown of technology and any associated items (markers) should be quick.

Prototype System Setup

The system described uses a single 3D camera to detect hand postures and calculates wrist flexion/extension for monitoring through the user interface. Monitoring can be conducted either in real time or offline.

Hardware

The Intel RealSense camera (model F200) operates similarly to other 3D cameras, which provide both a colour and a depth image; the estimation of depth relies on infrared reflection. Although the colour camera provides the option of HD resolution (1280x720 or 1920x1080), the infrared (IR) camera has a maximum resolution of 640x480. The IR camera is able to reach up to 60 frames per second (fps) when operating alone; the maximum frame rate of the colour camera is 30 fps. Online processing for the described application reduces this rate to approximately 11 fps during operation of the system. The stated range of the RealSense camera is between 0.2 and 1.2 meters; for proprietary algorithms covering hand detection, the range is reduced to 0.2-0.8 meters. During operation of the system, we find that the ideal range is 0.3-1 meters for general object detection. This is again reduced to a range of 0.3-0.8 meters for specific data point detection.

Software

The application was written in C++ using the Intel RealSense SDK (R3) for depth values and real-world coordinates, Intel OpenCV (2.4.11) for image processing libraries and Qt (5.4.2) to design the user interface.

Tracking Coloured Markers

The algorithm to track the passive coloured markers involves the following three stages: 1) background subtraction (of the depth image); 2) creating a colour mask and 3) blob detection.

For background subtraction, a reference frame of the background (the piano keys) free from the tracked objects (the hands) is recorded from the depth camera. A depth threshold then excludes every pixel that is not at least 5 millimeters closer to the camera than the corresponding pixel in the reference frame. Subsequently, the RGB image is converted to HSV colour space and colour thresholding is applied to produce a binary image mask. This mask is smoothed using a median filter and passed to the blob detection function, which locates regions of connected pixels. The detected markers are then identified based on their y-coordinate (height) within the frame.
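The following is a minimal sketch of two of the stages above, written in plain C++ rather than with the OpenCV calls the prototype uses. It is not the authors' implementation: the names `foregroundMask`, `findBlobs` and `Blob` are hypothetical, depth is assumed to be stored in millimeters with non-positive values marking invalid readings, and the colour mask and median filter are omitted. Ordering the blobs by their y-coordinate is one reading of how the markers are told apart.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

// Centroid and pixel count of one connected region (hypothetical type).
struct Blob { double cx; double cy; int area; };

// Keep a pixel only if it is at least minGapMm closer to the camera than the
// same pixel in the reference (background-only) depth frame.
// Assumption: depth is in millimeters and values <= 0 are invalid readings.
std::vector<std::uint8_t> foregroundMask(const std::vector<int>& depthMm,
                                         const std::vector<int>& referenceMm,
                                         int minGapMm = 5) {
    std::vector<std::uint8_t> mask(depthMm.size(), 0);
    for (std::size_t i = 0; i < depthMm.size() && i < referenceMm.size(); ++i) {
        const bool valid = depthMm[i] > 0 && referenceMm[i] > 0;
        if (valid && referenceMm[i] - depthMm[i] >= minGapMm) mask[i] = 1;
    }
    return mask;
}

// Find 4-connected regions in a row-major binary mask (width * height pixels)
// and return their centroids sorted by increasing y-coordinate.
std::vector<Blob> findBlobs(std::vector<std::uint8_t> mask, int width, int height) {
    std::vector<Blob> blobs;
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (!mask[y * width + x]) continue;
            long sumX = 0, sumY = 0;
            int area = 0;
            std::queue<std::pair<int, int>> pending;
            pending.push({x, y});
            mask[y * width + x] = 0;  // mark as visited
            while (!pending.empty()) {
                const std::pair<int, int> p = pending.front();
                pending.pop();
                sumX += p.first;
                sumY += p.second;
                ++area;
                for (int k = 0; k < 4; ++k) {
                    const int nx = p.first + dx[k];
                    const int ny = p.second + dy[k];
                    if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                    if (!mask[ny * width + nx]) continue;
                    mask[ny * width + nx] = 0;
                    pending.push({nx, ny});
                }
            }
            blobs.push_back({static_cast<double>(sumX) / area,
                             static_cast<double>(sumY) / area, area});
        }
    }
    std::sort(blobs.begin(), blobs.end(),
              [](const Blob& a, const Blob& b) { return a.cy < b.cy; });
    return blobs;
}
```

In a fuller pipeline the depth mask would be combined with the HSV colour mask before blob detection, so that only marker-coloured pixels on the hands survive.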

Marker Placement and Rotation Calculations

Figure 1 shows the placement of three markers on a) the centre of the forearm, b) the centre of the wrist joint and c) the metacarpal joint of the middle finger. Once the marker coordinates have been detected using the algorithm described in the previous section of this article, the application calculates the flexion/extension angles. Using the following steps, extension is reported as an angle with a positive sign and flexion is reported as an angle with a negative sign. When the wrist is in neutral position, the angle is reported as zero.

Figure 1

Two diagrams showing marker placement. i) Marker placement at a) the centre of the forearm, b) the centre of the wrist joint and c) the metacarpal joint of the middle finger. ii) Projection of point pC using marker positions A and B.

The angle ABC (θ) is calculated (Equation 1) using the dot product of normalised vectors AB and BC.

Equation 1

Equation to calculate angle ABC (θ)
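The equation itself is not reproduced in this text. A form consistent with the surrounding description would be the following, under the assumption that both vectors are taken from the wrist marker B (towards A and towards C), so that θ equals 180 degrees when the three markers are collinear, as the later subtraction from 180 degrees implies:

```latex
\theta = \arccos\!\left(
  \frac{\overrightarrow{BA}\cdot\overrightarrow{BC}}
       {\lVert\overrightarrow{BA}\rVert\,\lVert\overrightarrow{BC}\rVert}
\right),
\qquad
\overrightarrow{BA} = A - B,\quad \overrightarrow{BC} = C - B
```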

To determine whether the wrist is in a state of flexion or extension, the line segment AB is extended along its axis to project point pC (see Figure 1). Point pC is where point C would occur should the wrist be in a neutral position (0 degrees). If the depth of point C is greater than the depth of the projected point pC, then the wrist is in extension. If not, flexion is assumed. The degree of flexion/extension FEangle is then calculated as the difference between angle ABC and 180 degrees (Figure 2). This algorithm is accurate if vector AB is parallel to the x-y plane, i.e., the depth values of A and B are equal. If not, the result is currently an approximation. Although pronation/supination of the wrist[5] is assumed to affect accuracy only by altering the visibility of the markers to the camera, further testing is required to establish the full effects of wrist rotation on the calculated angle.
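The steps above can be sketched as follows. This is an illustrative reading of the description, not the prototype's code: `Point3`, `angleAbcDeg` and `flexionExtensionDeg` are hypothetical names, the angle is measured at B between the directions towards A and C (an assumption, so that neutral gives 180 degrees), pC is placed at distance |BC| from B along the extension of AB (also an assumption), and the three markers are assumed to be at distinct positions.

```cpp
#include <algorithm>
#include <cmath>

// A marker position in camera coordinates; z is the depth reported by the camera.
struct Point3 { double x; double y; double z; };

static Point3 diff(const Point3& p, const Point3& q) {
    return {p.x - q.x, p.y - q.y, p.z - q.z};
}
static double dot(const Point3& p, const Point3& q) {
    return p.x * q.x + p.y * q.y + p.z * q.z;
}
static double length(const Point3& p) { return std::sqrt(dot(p, p)); }

// Angle ABC in degrees, measured at B between the directions B->A and B->C.
double angleAbcDeg(const Point3& a, const Point3& b, const Point3& c) {
    const Point3 ba = diff(a, b);
    const Point3 bc = diff(c, b);
    double cosTheta = dot(ba, bc) / (length(ba) * length(bc));
    cosTheta = std::max(-1.0, std::min(1.0, cosTheta));  // guard against rounding
    return std::acos(cosTheta) * 180.0 / std::acos(-1.0);
}

// Signed flexion/extension angle: positive for extension, negative for
// flexion, zero when A, B and C are collinear (neutral wrist).
double flexionExtensionDeg(const Point3& a, const Point3& b, const Point3& c) {
    const double magnitude = 180.0 - angleAbcDeg(a, b, c);
    // Depth of the projected point pC = B + |BC| * unit(AB).
    const Point3 ab = diff(b, a);
    const double scale = length(diff(c, b)) / length(ab);
    const double projectedDepth = b.z + ab.z * scale;
    // Extension if C is deeper than pC; otherwise flexion is assumed.
    return (c.z > projectedDepth) ? magnitude : -magnitude;
}
```

For example, with A = (0, 0, 0.5) and B = (1, 0, 0.5), a marker C that lies 30 degrees off the AB axis with a greater depth than pC yields approximately +30, and the mirrored position yields approximately -30.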

User Interface

The user interface to this depth camera tracking system was designed to allow monitoring of wrist flexion/extension during either online or offline operation.

Figure 2

Algorithm showing how the value of extension or flexion is calculated from the angle detected by the application.

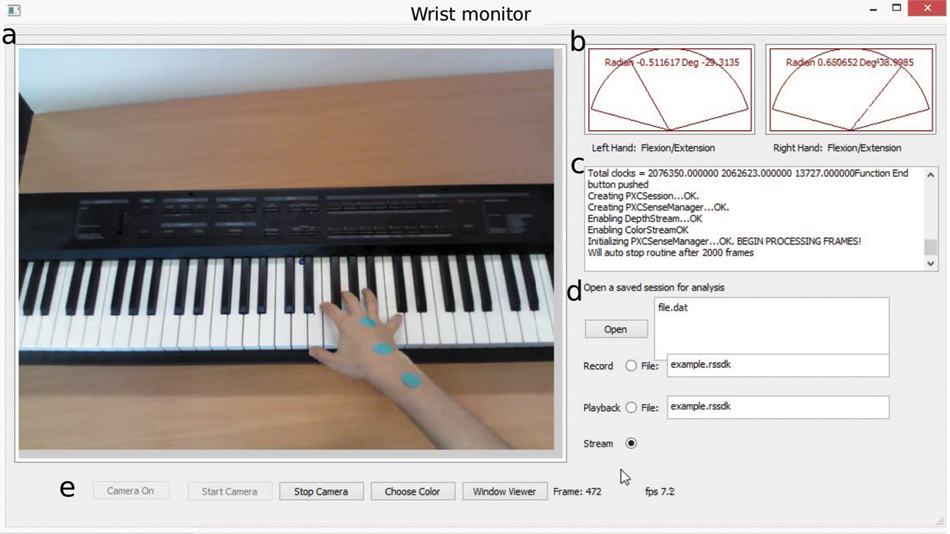

Figure 3

User Interface Camera Dialog, clockwise from top left: a) tracking window, b) online data visualization dials, c) log window, d) streaming/file playback options and e) start/stop and colour threshold controls.

Figure 4

Threshold dialog allowing user control of HSV thresholds via sliders. Point detection and the colour thresholding used to perform the operation are shown in the two displayed windows.

Figure 5

Two screenshots of the right wrist. i) The wrist is in a slightly extended position. The angle of extension falls within the threshold so the visualization of the data is green. ii) The wrist is in an overly extended position. The angle of extension exceeds the threshold so the visualization of the data is red.

Operation

Figure 3 shows the camera dialog presented to the user. This dialog offers options to stream, record directly to file, or play a pre-recorded file, allowing for use online as a piano task is performed, or for an offline evaluation either during a lesson or at home. Options are provided to adjust colour thresholds by using slider bars and monitoring the output, so that the system can be easily adapted to any lighting situation (Figure 4). This contributes to the portability of the system. Ideally, values will be set once and then retained for future use in order to allow for quick setup and teardown in the same location. Students may find it necessary to adjust the values for different practice venues.

As the detection relies on tracking from a reference frame, the first frames should be of the background only. Real-time visualization of the wrist angle of each hand in relation to typing thresholds is displayed via the dials. Upon pressing the “Stop Camera” button, the offline visualization of the entire recorded session is displayed.

Data Visualization

The angle of flexion/extension of each wrist is represented in both an online and an offline format. In the online format, data is shown via two dials on the right-hand side of the main dialog. The dial needles are fully vertical, indicating zero degree flexion/extension, when the hand is in a fully neutral position. The needles point to the left when the wrist is in flexion and to the right when the wrist is in extension. The dial’s colour is manipulated based on the value of the current wrist angle compared to the safety thresholds stated in Keir et al. (2007). When the angle of flexion/extension is below the threshold, i.e., in an acceptable posture, the dial will be coloured green. When this threshold is exceeded, the dial will be coloured red. Figure 5 shows screenshots of this online visualization of the right hand in two states of extension: acceptable (green dial, top picture) and exceeding thresholds (red dial, bottom picture).
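The green/red decision described above can be sketched as a simple comparison. This is a hedged illustration only: `DialColour`, `Thresholds` and `classifyWristAngle` are hypothetical names, and the default values are the typing guideline angles quoted earlier from Keir et al. (2007), 26.6 degrees for extension and 37.7 degrees for flexion, which are assumed here to be the values the prototype compares against.

```cpp
// Colour shown on the dial for the current wrist angle (hypothetical type).
enum class DialColour { Green, Red };

// Limits in degrees. Assumption: the typing guideline values quoted from
// Keir et al. (2007) are used unchanged.
struct Thresholds {
    double extensionDeg = 26.6;
    double flexionDeg = 37.7;
};

// feAngleDeg follows the sign convention of the text:
// positive = extension, negative = flexion, zero = neutral.
DialColour classifyWristAngle(double feAngleDeg, const Thresholds& limits = Thresholds{}) {
    const bool exceeded = (feAngleDeg >= 0.0)
        ? feAngleDeg > limits.extensionDeg
        : -feAngleDeg > limits.flexionDeg;
    return exceeded ? DialColour::Red : DialColour::Green;
}
```

Keeping the limits in a small struct means they can be replaced once piano-specific thresholds become available, without changing the comparison itself.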

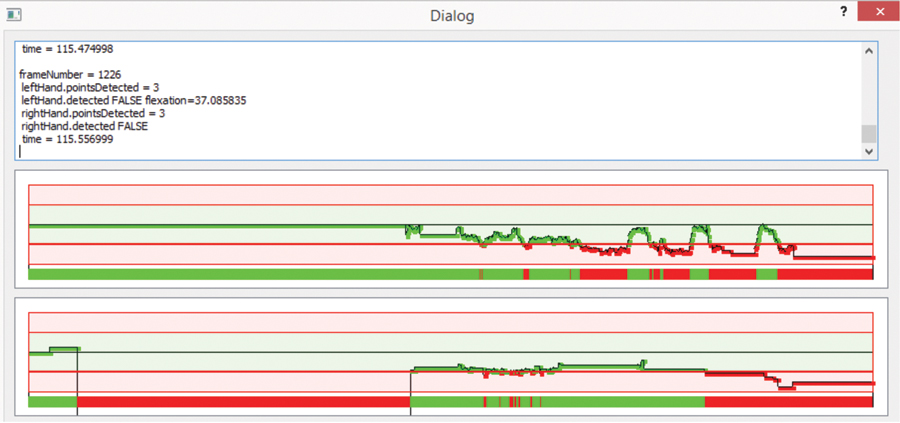

Figure 6

Offline data visualization of a sample video stream. The first rail shows data for detection of the right hand and the second rail shows data for detection of the left hand. Values in green are those that fall below the threshold safety values of flexion/extension. Red values are those that exceed these thresholds.

The offline visualization shown in Figure 6 is in the form of a graph where the middle rail represents zero (or neutral position). The positive values represent wrist extension and the negative values represent wrist flexion. Again, the green values reflect those angles calculated within the recommended thresholds and red values reflect those angles calculated to exceed the thresholds.

Conclusion

In this article we define a set of requirements for technologies that will increase knowledge of wrist angle movements in piano performance, and present a prototype based on these requirements. The prototype grabs images recorded from a commercially available 3D camera and analyses the flexion/extension in each wrist based on the detected positions of coloured markers. The recorded data, based on proximity to maximum angle thresholds, is visualized in both real-time and offline forms, allowing the user to monitor actions either as they perform them or after the event.

In order to improve the current operation of the application, the following steps are required:

improve accuracy of hand detection in complicated backgrounds so that the application can be used in various environments;

use additional markers on the hand and forearm such that radial/ulnar deviation can also be calculated;

perform validation tests with accelerometers or multi-camera setups to estimate the error in the calculation of wrist angle for varying marker placement, and for supination/pronation wrist rotations.

Although the visualization in the user interface takes into account comments made by students and teachers in Gillies et al. (2014), the usefulness of this type of visualization needs to be assessed in practice both by teachers using it as an educational aid, and by students using it to rehearse technically difficult passages. Determining the function of both online and offline visualizations within the rehearsal or teaching process will enable improvements in the representation of this information.

Few music pedagogy applications offer feedback that clarifies postures in relation to recommended benchmarks. In part, this is due to the lack of information available on occurrences of wrist postures specific to music performance, and on the likelihood that these may lead to injury. Data exists linking certain wrist angles of flexion/extension to carpal tunnel pressure in typing (Rempel et al. 2008) and these are currently used as thresholds for comparison. In the future, further data collection and analysis of the wrist angles used in performance by multiple individuals and across a variety of repertoire will help to provide more accurate specifications for this particular set of tasks.

Further user evaluation is required to establish the usefulness of this particular application. However, designing feedback systems for use in music pedagogy already raises questions about how technology can be positively incorporated into music instrument teaching and learning. How can the reduction and visualization of information through motion capture be useful for injury prevention? As Savvidou et al. (2015) argue, this technology may be used to raise awareness among, and start a conversation between, students and teachers in order to minimize the risk of injury.

Appendices

Biographical notes

Jennifer MacRitchie

Dr. Jennifer MacRitchie is a senior research fellow in Health and Wellbeing at Western Sydney University. With a background in both electrical engineering and music, her research focuses on the acquisition and development of motor skills in piano performance. Her studies examine the movements of performers ranging from novices to experts, and from those who have studied music from a young age to those who are rediscovering music in retirement. Jennifer has published several articles on the design of multimodal capture systems to analyze musical performance. She serves as associate editor of Frontiers in Psychology, Performance Science, and is on the editorial board of Musicae Scientiae.

Christopher Baylis

Christopher Baylis completed the programming of this motion-tracking user interface as an undergraduate student at Western Sydney University, under the supervision of Jennifer MacRitchie.

Notes

[1] For orchestral members, see Ackermann, Driscoll, and Kenny 2012; Kenny and Ackermann 2016. For pianists, see Allsop and Ackland 2010; Bragge 2006; Zaza 1998. For conservatory students, see Williamon and Thompson 2004.

[2] Flexion of the wrist occurs when the palm is bent down towards the inner forearm; extension is the opposing movement that occurs when the back of the hand is raised. Ulnar deviation is bending of the wrist towards the little finger; radial deviation is the same bending towards the thumb.

[3] Savvidou, Willis, Li, and Skubic classify neutral, moderate and extreme positions for wrist flexion as between 0–7°, between 7–16° and above 16°, respectively, although reasons for these particular thresholds are not detailed. The same thresholds, though with negative degrees, are given for extension. There is no evidence to suggest a relationship between these specific thresholds in piano playing and levels of wrist pressure or incidence of injury experienced by the pianists.

[4] TELMI: Technology Enhanced Learning of Musical Instrument Performance, http://telmi.upf.edu, accessed November 5, 2017.

[5] Supination of the wrist occurs when the forearm is rotated such that the palm faces upwards; pronation of the wrist occurs when the forearm is rotated such that the palm faces downwards.

Bibliography

- Ackermann, Bronwen, Tim Driscoll and Diana T. Kenny (2012). “Musculoskeletal Pain and Injury in Professional Orchestral Musicians in Australia”, Medical Problems of Performing Artists, vol. 27, no 4, pp. 181–187.

- Allsop, Lili and Tim Ackland (2010). “The Prevalence of Playing-Related Musculoskeletal Disorders in Relation to Piano Players’ Playing Techniques and Practising Strategies”, Music Performance Research, vol. 3, no 1, pp. 61–78.

- Berman, Boris (2000). Notes from the Pianist’s Bench. Yale University Press.

- Bevilacqua, Frederic, Fabrice Guédy, Norbert Schnell, Emmanuel Fléty and Nicolas Leroy (2007). “Wireless Sensor Interface and Gesture-Follower for Music Pedagogy”, in Proceedings of the 2007 Conference on New Interfaces for Musical Expression, New York, pp. 124–129.

- Bragge, Peter (2006). “A Systematic Review of Prevalence and Risk Factors Associated with Playing-Related Musculoskeletal Disorders in Pianists”, Occupational Medicine, vol. 56, no 1, pp. 28–38.

- Chung, In-Seol, Jaiyoung Ryu, Nobuke Ohnishi, Bruce Rowen and Jeff Headrich (1992). “Wrist Motion Analysis in Pianists”, Medical Problems of Performing Artists, vol. 7, no 1, pp. 1–5.

- Fraser, Alan (2011). The Craft of Piano Playing: A New Approach to Piano Technique, 2nd ed., Lanham, Maryland, Scarecrow Press.

- Furuya, Shinichi, Tomoko Aoki, Hidehiro Nakahara and Hiroshi Kinoshita (2012). “Individual Differences in the Biomechanical Effect of Loudness and Tempo on Upper-Limb Movements During Repetitive Piano Keystrokes”, Human Movement Science, vol. 31, no 1, pp. 26–39.

- Gillies, Marco, Harry Brenton, Matthew Yee-King, Andreu Grimalt-Reynes and Mark d’Inverno (2014). “Sketches vs Skeletons: Video Annotation Can Capture What Motion Capture Cannot”, in Proceedings of the Second International Workshop on Movement and Computing (MOCO 2015), New York, ACM, pp. 104–111.

- Keir, Peter J., Joel M. Bach, Mark Hudes and David M. Rempel (2007). “Guidelines for Wrist Posture Based on Carpal Tunnel Pressure Thresholds”, Human Factors, vol. 49, no 1, pp. 88–99.

- Kenny, Diana and Bronwen Ackermann (2016). “Performance-Related Musculoskeletal Pain, Depression and Music Performance Anxiety in Professional Orchestral Musicians: A Population Study”, Psychology of Music, vol. 43, no 1, pp. 43–60.

- Kim, David, Otmar Hilliges, Shahram Izadi, Alex Butler, Jiawen Chen, Iason Oikonomidis and Patrick Olivier (2012). “Digits: Freehand 3D Interactions Anywhere Using a Wrist-Worn Gloveless Sensor”, in Proceedings of the 25th Annual ACM Symposium on User Interface Software and Technology (UIST 2012), New York, ACM, pp. 167–176.

- Kinoshita, Hiroshi, Shinichi Furuya, Tomoko Aoki and Eckart Altenmüller (2007). “Loudness Control in Pianists as Exemplified in Keystroke Force Measurements on Different Touches”, Journal of the Acoustical Society of America, vol. 121, no 5, part 1, pp. 2959–2969.

- Lai, Kuan-Yin, Shyi-Kuen Wu, I-Ming Jou, Hsiao-Man Hsu, Mei-Jin C. Sea and Li-Chieh Kuo (2015). “Effects of Hand Span Size and Right-Left Hand Side on the Piano Playing Performances: Exploration of the Potential Risk Factors with Regard to Piano-Related Musculoskeletal Disorders”, International Journal of Industrial Ergonomics, vol. 50, pp. 97–104.

- Lee, Sang-Hie (2010). “Hand Biomechanics in Skilled Pianists Playing a Scale in Thirds”, Medical Problems of Performing Artists, vol. 25, no 4, pp. 167–174.

- MacRitchie, Jennifer (2015). “The Art and Science Behind Piano Touch: A Review Connecting Multi-Disciplinary Literature”, Musicae Scientiae, vol. 19, no 2, pp. 171–190.

- Mark, Thomas (2003). What Every Pianist Needs to Know about the Body. Chicago, G.I.A. Publications.

- Metcalf, Cheryl D., Thomas A. Irvine, Jennifer L. Sims, Yu L. Wang, Alvin W. Y. Su and David O. Norris (2014). “Complex Hand Dexterity: A Review of Biomechanical Methods for Measuring Musical Performance”, Frontiers in Psychology, vol. 5, no 414, doi: 10.3389/fpsyg.2014.00414.

- Ng, Kia (2008). “Interactive Feedbacks with Visualisation and Sonification for Technology-Enhanced Learning for Music Performance”, in Proceedings of the 26th Annual ACM International Conference on Design of Communication, New York, ACM, pp. 281–282.

- Oikawa, Naoki, Sadako Tsubota, Takako Chikenji, Gyoku Chin and Mitsuhiro Aoki (2011). “Wrist Positioning and Muscle Activities in the Wrist Extensor and Flexor During Piano Playing”, Hong Kong Journal of Occupational Therapy, vol. 21, no 1, pp. 41–46.

- Pak, Chong H. and Kris Chesky (2001). “Prevalence of Hand, Finger, and Wrist Musculoskeletal Problems in Keyboard Instrumentalists”, Medical Problems of Performing Artists, vol. 16, no 1, pp. 17–23.

- Rempel, David M., Peter J. Keir and Joel M. Bach (2008). “Effect of Wrist Posture on Carpal Tunnel Pressure while Typing”, Journal of Orthopaedic Research, vol. 26, no 9, pp. 1269–1273.

- Sakai, Naotaka (1992). “Hand Pain Related to Keyboard Techniques in Pianists”, Medical Problems of Performing Artists, vol. 7, no 2, pp. 63–65.

- Sakai, Naotaka, Michael C. Liu, Fong Chin Su, Allen T. Bishop and Kai Nan An (2006). “Hand Span and Digital Motion on the Keyboard: Concerns of Overuse Syndrome in Musicians”, Journal of Hand Surgery, vol. 31, no 5, pp. 830–835.

- Savvidou, Paola, Brad Willis, Mengyuan Li and Marjorie Skubic (2015). “Assessing Injury Risk in Pianists”, MTNA E-Journal, vol. 7, no 2, pp. 2–16.

- Schoonderwaldt, Erwin and Marcelo M. Wanderley (2007). “Visualization of Bowing Gestures for Feedback: The Hodgson Plot”, in Proceedings of the 3rd International Conference on Automated Production of Cross Media Content for Multi-channel Distribution (AXMEDIS07), vol. 2, pp. 65–70.

- Sharp, Toby, Cem Keskin, Duncan Robertson, Jonathan Taylor and Jamie Shotton (2015). “Accurate, Robust, and Flexible Real-time Hand Tracking”, in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI 2015), New York, ACM, pp. 3633–3642.

- Silva, Anabela G., Filipa M. B. Lã and Vera Afreixo (2015). “Pain Prevalence in Instrumental Musicians: A Systematic Review”, Medical Problems of Performing Artists, vol. 30, no 1, pp. 8–19.

- Treaster, Delia E. and William S. Marras (2000). “A Biomechanical Assessment of Alternate Keyboards Using Tendon Travel”, Proceedings of the Human Factors and Ergonomics Society Annual Meeting, vol. 44, no 6, pp. 685–688.

- Weyde, Tillman, Kia Ng, Kerstin Neubarth, Oliver Larkin, Thijs Koerselman and Bee Ong (2007). “A Systematic Approach to Music Performance Learning with Multimodal Technology”, paper presented at the Support E-Learning Conference, Quebec City, Canada.

- Wheatley-Brown, Michèle (2011). “An Analysis of Terminology Describing the Physical Aspect of Piano Technique”, unpublished doctoral thesis, University of Ottawa.

- Williamon, Aaron and Sam Thompson (2004). “Awareness and Incidence of Health Problems among Conservatoire Students”, Psychology of Music, vol. 34, no 4, pp. 411–430.

- Wristen, Brenda (2000). “Avoiding Piano-Related Injury: A Proposed Theoretical Procedure for Biomechanical Analysis of Piano Technique”, Medical Problems of Performing Artists, vol. 15, pp. 55–64.

- Wristen, Brenda, Myung-Chul Jung, A. K. G. Wismer and M. Susan Hallbeck (2006). “Assessment of Muscle Activity and Joint Angles in Small-Handed Pianists: A Pilot Study on the 7/8-Sized Keyboard versus the Full-Sized Keyboard”, Medical Problems of Performing Artists, vol. 21, no 1, pp. 3–9.

- Zaza, Christine (1998). “Playing-Related Musculoskeletal Disorders in Musicians: A Systematic Review of Incidence and Prevalence”, Canadian Medical Association Journal, vol. 158, no 8, pp. 1019–1025.

List of figures

Figure 1

Equation 1

Equation to calculate angle ABC (θ)
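
For three marker positions A, B and C, a standard way to obtain the angle at the vertex B is through the dot product of the two vectors leaving B. The form below is a sketch under that assumption (A, B and C treated as position vectors) and may differ from the expression used in the article's own Equation 1:

```latex
\theta = \arccos\left(
  \frac{(\mathbf{A}-\mathbf{B}) \cdot (\mathbf{C}-\mathbf{B})}
       {\lVert \mathbf{A}-\mathbf{B} \rVert \, \lVert \mathbf{C}-\mathbf{B} \rVert}
\right)
```

With this definition, θ = 180° corresponds to three collinear markers, so the deviation from a straight alignment is 180° − θ.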

Figure 2

Figure 3

Figure 4

Figure 5

Two screenshots of the right wrist. i) The wrist is in a slightly extended position. The angle of extension falls within the threshold so the visualization of the data is green. ii) The wrist is in an overly extended position. The angle of extension exceeds the threshold so the visualization of the data is red.

Figure 6

Offline data visualization of a sample video stream. The first rail shows data for detection of the right hand and the second rail shows data for detection of the left hand. Values in green are those that fall below the threshold safety values of flexion/extension. Red values are those that exceed these thresholds.